AMD Radeon R9 295X2 8GB Video Card Review at 4K Ultra HD

Dual Monitor Power Consumption

One thing we have noticed with some of the current AMD Radeon graphics cards is that they aren’t as power efficient as NVIDIA GeForce cards in multi-monitor setups. This isn’t something we touch on in every video card review, but since the AMD Radeon R9 295X2 uses two newer Hawaii XT GPUs, we wanted to check how power efficient it is with more than one display connected.

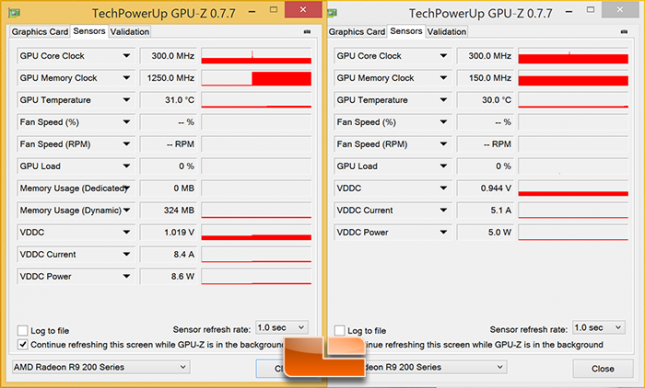

In the GPU-Z screenshots above we have the AMD Radeon R9 295X2 running with one monitor for the first half of the GPU-Z log, and then we plug in a second monitor. Hooking up the second monitor significantly increased the power draw. Having to push pixels and manage the clocks of two displays puts more strain on the GPU, and AMD raises the memory clock from 150MHz to 1250MHz on one GPU to do this. Running the higher clock speed also requires more voltage in an idle state, and that means more heat and sometimes higher fan speeds. Our temperature went from 26C on both GPUs to 31/31C as a result of hooking up a second display to the video card.

| | GTX 780 Ti SLI | GTX 780 Ti SLI | R9 295X2 | R9 295X2 |
|---|---|---|---|---|
| # of Displays | 1 | 2 | 1 | 2 |
| Core Clock | 324.0 MHz | 324.0 MHz | 300.0 MHz | 300.0 MHz |
| Mem Clock | 162.0 MHz | 162.0 MHz | 150.0 MHz | 1250.0 MHz |
| Idle Temp | 30C | 30C | 26C | 31C |
| Idle Power | 132W | 136W | 135W | 187W |
| Fan Speed | 17% | 17% | – | – |
| Fan Noise | 39.9 dB | 39.9 dB | 43.5 dB | 43.6 dB |

As you can see, there is a pretty big difference in power consumption and temperatures when adding a second display to an NVIDIA versus an AMD graphics card solution. Adding a second display to the NVIDIA GeForce GTX 780 Ti SLI setup caused a higher load on the memory controller, but no increase in clock speeds or voltages. This led to a modest 4 Watt increase in idle power draw (132W to 136W) when the second display was added. The AMD Radeon R9 295X2, on the other hand, had to run one GPU’s memory clock at full 3D speed (1250MHz instead of 150MHz), and that GPU therefore needed more voltage. This raised the idle temperature of the GPUs from 26C to 31C and added 52 Watts to idle power consumption (135W to 187W). NVIDIA clearly has a better grip on multi-monitor power efficiency.
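For readers who want to check the math, here is a minimal sketch that works out the idle power increase for each setup from the Idle Power row of the table above. The function and variable names are our own for illustration and are not part of GPU-Z or any vendor tool.

```python
# Idle power readings in watts from the table above:
# (one display, two displays)
idle_power_w = {
    "GTX 780 Ti SLI": (132, 136),
    "R9 295X2": (135, 187),
}


def idle_power_delta(one_display_w, two_display_w):
    """Return the absolute (W) and relative (%) idle power increase
    caused by connecting a second display."""
    delta_w = two_display_w - one_display_w
    delta_pct = 100.0 * delta_w / one_display_w
    return delta_w, delta_pct


for card, (one, two) in idle_power_w.items():
    delta_w, delta_pct = idle_power_delta(one, two)
    print(f"{card}: +{delta_w} W ({delta_pct:.1f}% over single-display idle)")
```

By this calculation the second display costs the GTX 780 Ti SLI setup about 3% more idle power, while the R9 295X2 draws roughly 38.5% more.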