AMD Radeon RX Vega 56 versus NVIDIA GeForce GTX 1070

Temperature & Noise Testing

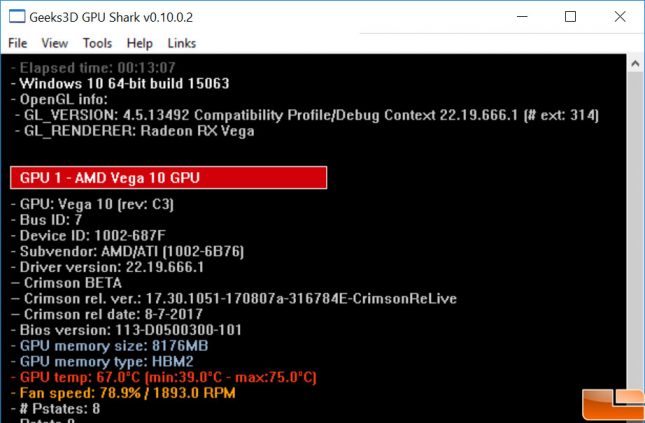

Gaming performance is the most important factor when buying a graphics card, but you also need to consider noise, temperature and power consumption. Since GPU-Z doesn't yet read Vega temperatures, we had no way to record or log our temperatures with it. AMD Radeon Settings isn't good for idle temperatures because the application itself puts a load on the GPU, so we ended up using GPU Shark to get some readings.

AMD Radeon RX Vega 56 Temperatures:

The AMD Radeon RX Vega 56 reference card idled at 39C and hit 75C while gaming, according to GPU Shark.

Gigabyte GeForce GTX 1070 G1 Gaming Temperatures:

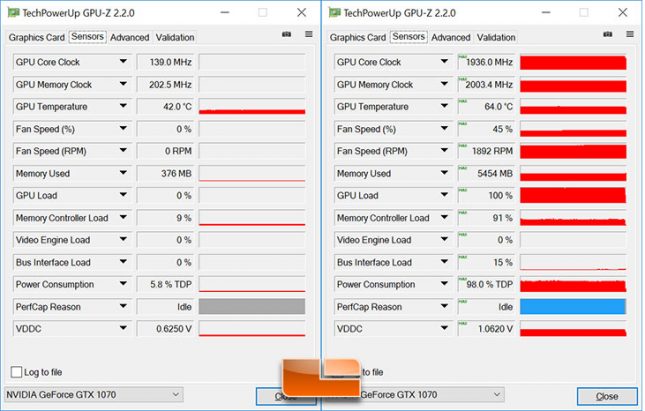

The Gigabyte GeForce GTX 1070 G1 Gaming runs with its fans stopped at idle and averaged 42C. After more than half an hour of gaming, temperatures topped out at 64C.

Sound Testing

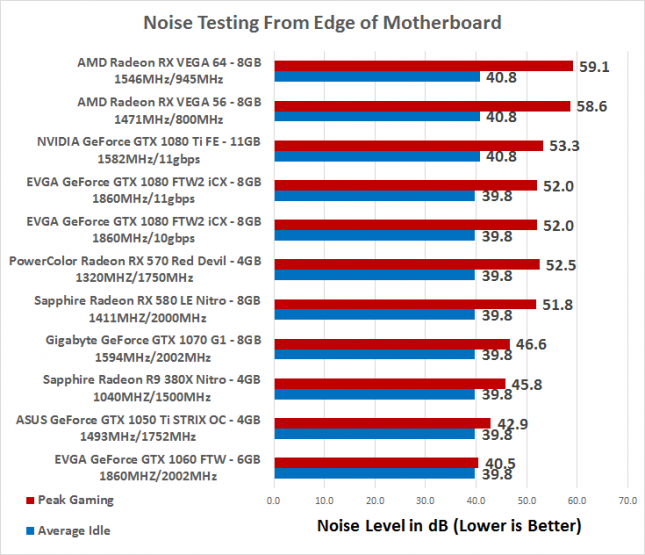

We test noise levels with an Extech sound level meter that has ±1.5dB accuracy and meets Type 2 standards. On its low measurement range the meter covers 35dB to 90dB, which is perfect for us, as our test room usually averages around 36dB. We measure the sound level two inches above the corner of the motherboard with 'A' frequency weighting. The microphone wind cover is used to make sure no air blows across the microphone, which would seriously throw off the data.

When it comes to noise levels, the Gigabyte GeForce GTX 1070 G1 Gaming with the WINDFORCE 3X cooler is the easy winner. This model has 'fan stop' for 0dB operation at idle, and under load our meter only hit 46.6dB. The AMD Radeon RX Vega 56 has a blower-style fan that always spins, so it measured about 1dB higher at idle and 12dB higher when gaming. The decibel scale is logarithmic rather than linear: moving from 50dB to 60dB is a 10x increase in sound intensity, and a 12dB gap works out to roughly 16 times the intensity. This is a massive difference that one really needs to hear to believe.
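To make the logarithmic scale concrete, here is a small sketch that converts a decibel difference into a ratio of sound intensities using 10^(ΔdB/10). The function name `intensity_ratio` is our own illustration, and the sketch simplifies by treating the A-weighted meter readings as plain intensity levels.

```python
def intensity_ratio(delta_db: float) -> float:
    """Return the ratio of two sound intensities separated by delta_db decibels.

    Sound intensity level is L = 10 * log10(I / I0), so a difference of
    delta_db between two readings corresponds to I2 / I1 = 10 ** (delta_db / 10).
    Simplification: A-weighted readings are treated as plain intensity levels.
    """
    return 10 ** (delta_db / 10)


# Differences discussed above (RX Vega 56 relative to the GTX 1070 G1 Gaming,
# plus the 50dB -> 60dB example from the text).
for label, delta in [("idle", 1.0), ("gaming", 12.0), ("50dB -> 60dB", 10.0)]:
    print(f"{label}: +{delta:.0f}dB = {intensity_ratio(delta):.1f}x the sound intensity")
```

Running it shows why a 12dB gap matters so much more than the 1dB gap at idle: each 10dB step multiplies intensity by ten, so the ratios compound rather than add.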