AMD Mantle API Real World BF4 Benchmark Performance On Catalyst 14.1

AMD Mantle Real World Performance

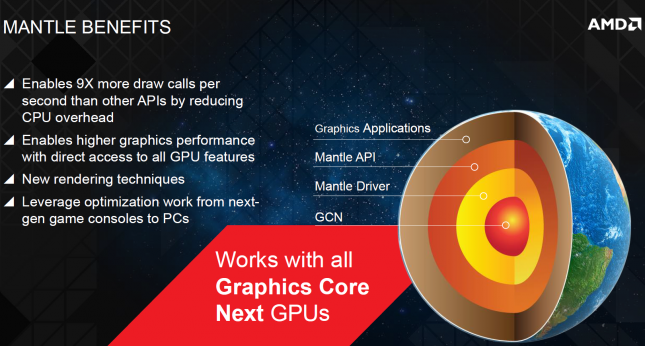

AMD has been talking about the potential performance increase that its new Mantle technology is going to provide, and we have all seen the numbers that AMD and EA-DICE have been releasing. AMD has finally released the Catalyst 14.1 beta drivers, so now we can do our own independent testing to check out performance with and without Mantle enabled.

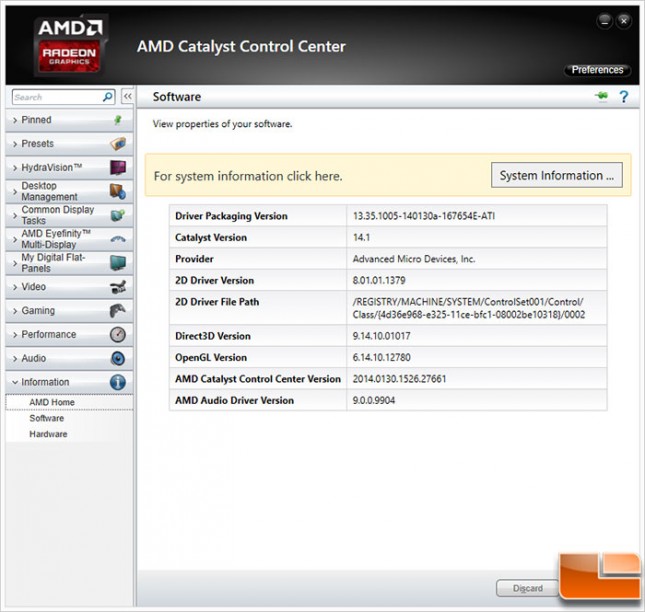

We were given early access to the Catalyst 14.1 beta driver, and there are a few hoops to jump through during the installation process. We were able to do a successful install, but it looks like the installer is causing some issues for AMD. Here is a look at Catalyst Control Center confirming that we are now running Catalyst driver package 14.1.

Currently there are only two ways to test the AMD Mantle API. You can use the latest build of the highly popular Battlefield 4, or there is a new synthetic benchmark called StarSwarm that is now available to download and use. We tried both benchmark options on what we consider a mainstream gaming platform (an Intel Core i5-3570 ‘Ivy Bridge’ processor with a Sapphire Radeon R7 260X video card).

Let’s see whether the numbers that have been released are pure marketing, or whether Mantle performs as well as we’re told it does.