AMD Ryzen 7 3700X and Ryzen 9 3900X CPU Review

AIXPRT – AI benchmark tool

For the first time, we are using AIXPRT Community Preview 2, a pre-release build of AIXPRT. AIXPRT is an AI benchmark tool that makes it easier to evaluate a system's machine-learning inference performance by running common image-classification and object-detection workloads.

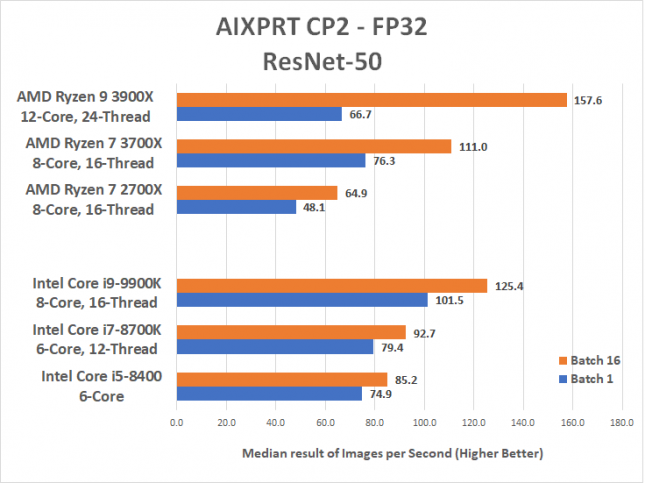

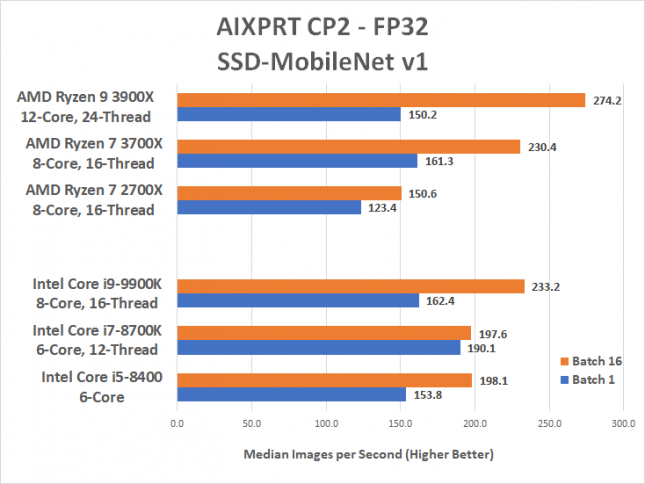

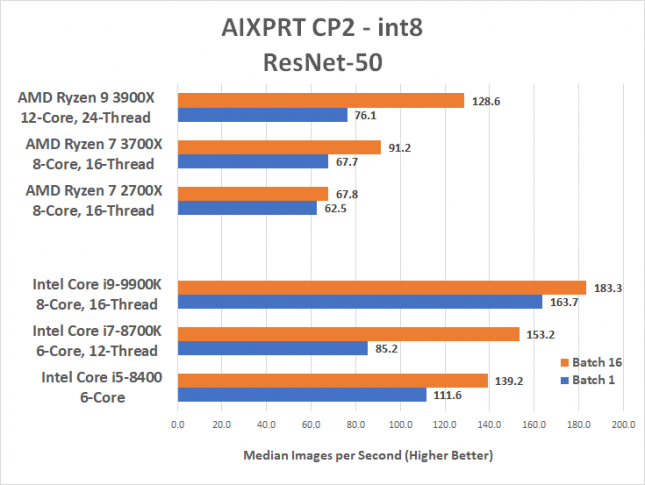

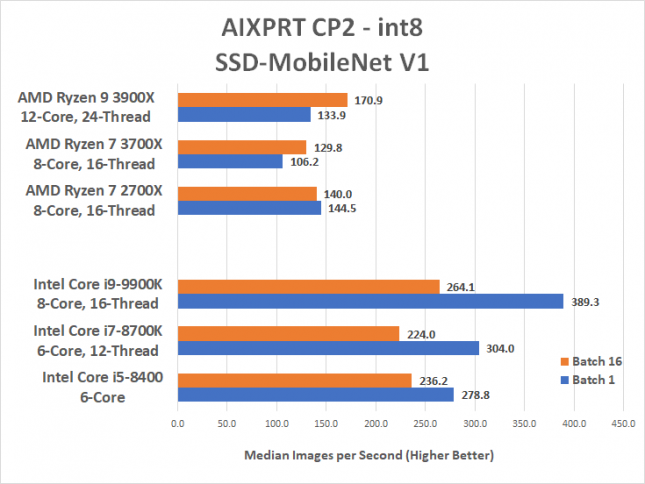

We used the Intel OpenVINO toolkit to run image-classification and object-detection workloads with the ResNet-50 and SSD-MobileNet v1 networks. We tested at FP32 and INT8 precision on our Windows 10 v1903 test systems. The test reports results at batch sizes of 1, 2, 4, 8, 16, and 32; we chart only the batch 1 and batch 16 results. For the lowest single-inference latency in a client-side use case, a batch size of 1 is usually best. Server workloads that prioritize maximum throughput typically run concurrent inferences at larger batch sizes (8, 16, 32, 64, or 128). Our desktop readers will therefore likely be most interested in the batch 1 results.
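The latency-versus-throughput trade-off behind the batch size choice comes down to simple arithmetic: every image in a batch is returned only when the whole batch finishes, but a larger batch can push more images through per second. The sketch below illustrates this; the function name `throughput_ips` is hypothetical, and the latencies are made-up placeholders, not AIXPRT measurements from our test systems.

```python
# Sketch: why batch 1 suits latency and larger batches suit throughput.
# The latencies below are HYPOTHETICAL placeholders, not AIXPRT results.

def throughput_ips(batch_size: int, batch_latency_ms: float) -> float:
    """Images per second when a batch of `batch_size` images finishes in
    `batch_latency_ms` milliseconds."""
    if batch_size <= 0 or batch_latency_ms <= 0:
        raise ValueError("batch_size and batch_latency_ms must be positive")
    return batch_size * 1000.0 / batch_latency_ms


# Hypothetical: one batch of 1 takes 10 ms, one batch of 16 takes 80 ms.
hypothetical = {1: 10.0, 16: 80.0}

for batch, latency_ms in hypothetical.items():
    # Each image waits for its whole batch, so per-request wait = batch latency.
    print(f"batch {batch:>2}: wait {latency_ms:.0f} ms, "
          f"{throughput_ips(batch, latency_ms):.0f} images/s")
```

With these placeholder numbers, batch 16 doubles throughput (200 vs. 100 images/s) while making each request wait eight times longer (80 ms vs. 10 ms), which is why a client-side user waiting on a single result cares most about batch 1.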

Benchmark Results: We saw run-to-run variance of up to 30%, so we report the median of three runs. Some results were unexpected, so we reran the test several times, and the results stayed essentially the same. We can't explain why the 3900X is slower than the 3700X in some of the batch 1 scenarios, but that is what the results consistently showed. AIXPRT is a new tool that is still in development, but it gives an idea of AI inference performance, which we expect to become a growing desktop PC workload in the years to come. Sadly, deepfake tech seems to be all the buzz these days.
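The median-of-three approach can be sketched as follows. This is a minimal illustration, not AIXPRT's own reporting logic: the function name `summarize_runs` is hypothetical, and measuring "variance" as the spread (max - min) / min is our assumption, since the exact definition behind the 30% figure isn't stated. The scores in the usage example are made up.

```python
from statistics import median


def summarize_runs(scores: list[float]) -> tuple[float, float]:
    """Return (median score, spread) for repeated runs of one benchmark.

    Spread is (max - min) / min, an ASSUMED definition of run-to-run variance.
    """
    if not scores or min(scores) <= 0:
        raise ValueError("need at least one positive score")
    spread = (max(scores) - min(scores)) / min(scores)
    # With three runs, the median is the middle value, so a single
    # unusually fast or slow run does not move the reported result.
    return median(scores), spread


# Hypothetical scores from three runs (not measured data).
result, spread = summarize_runs([100.0, 130.0, 110.0])
print(f"median {result:.0f}, spread {spread:.0%}")
```

Here the 130 outlier widens the spread to 30% but leaves the reported median at 110, whereas a mean would be pulled up to about 113.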