NVIDIA GeForce GTX 780 Ti vs AMD Radeon R9 290X at 4K Ultra HD

The Best Single-GPU Card For 4K Gaming

We often get asked by readers what the best video card is for gaming on a single 4K display or on an NVIDIA Surround or AMD Eyefinity multi-display setup. We addressed this question in October with a look at the just-released AMD Radeon R9 290X versus the NVIDIA GeForce GTX 780 on our Sharp PN-K321 32″ 4K Ultra HD Monitor at 3840 x 2160. A ton has changed over the past two months: NVIDIA launched its new flagship card, the GeForce GTX 780 Ti, and both AMD and NVIDIA have released new drivers that improve gaming performance.

4K displays also continue to come down in price. Just this week Dell announced a pair of new 4K displays that are more affordable than ever. The new Dell UltraSharp 24 Ultra HD Monitor (UP2414Q) runs $1,399 and uses an IPS panel that is factory-tuned for Adobe RGB (wide gamut), with more-than-acceptable viewing angles. That price point should be low enough to get more gamers playing at 4K! The Sharp PN-K321 4K LED-backlit display that we have is getting pretty hard to find, but the ASUS PQ321Q has dropped to $3,323 shipped (note that the Sharp and ASUS 31.5-inch 4K monitors are identical internally). Not everyone has $3,300 to spend on a monitor, but a good number of gamers are willing to pay around $1,000 for a 4K monitor, and we expect to see 24″ and 28″ 4K monitors with TN panels for $1,000 or less in 2014.

For this comparison we'll be using two new video cards. The NVIDIA GeForce GTX 780 Ti 3GB SC we'll be using is the EVGA GeForce GTX 780 Ti Superclocked w/ ACX Cooling, sold under part number 03G-P4-2884-KR for $729.99 shipped, or $719.99 shipped if you want the NVIDIA reference cooler. This card is factory overclocked and runs with a 1006MHz base clock, a 1072MHz boost clock and 7000MHz on its 3GB of GDDR5 memory. It comes with Assassin's Creed IV: Black Flag, Tom Clancy's Splinter Cell: Blacklist Deluxe Edition and Batman: Arkham Origins. EVGA did a wonderful job on this card, and it is a perfect example of what one can expect from one of the best GeForce GTX 780 Ti cards on the market.

The AMD Radeon R9 290X has been out since October, and we will be testing a retail Sapphire Radeon R9 290X 4GB that came from Newegg. This card retails for $549.99 plus shipping, which is $180 less than the EVGA GeForce GTX 780 Ti SC. There are no custom AMD Radeon R9 290X cards on the market, so if you want AMD's flagship single-GPU card right now, you are stuck with factory clock speeds and the reference GPU cooler. The Sapphire Radeon R9 290X 4GB runs at 1000MHz on the core and 5000MHz on its 4GB of GDDR5 memory.

The odd thing is that neither of these cards is in stock at Amazon or Newegg right now, even though both have been out for several weeks. It is still tough to find some of these high-end cards!

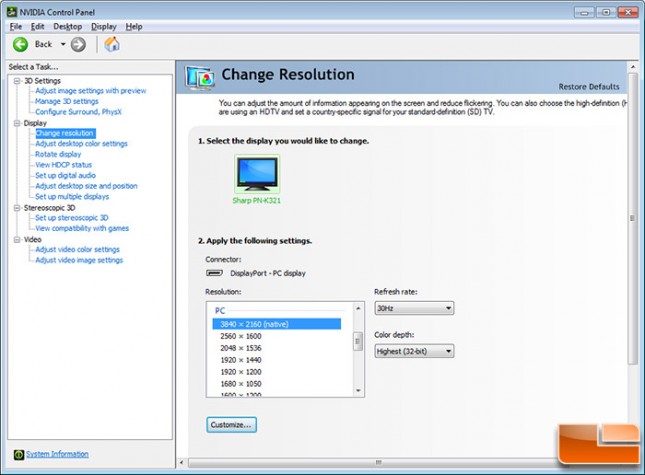

Both the Radeon R9 290X and the GeForce GTX 780 Ti had no issues being set up and run at 4K Ultra HD resolution on our Windows 7 64-bit test system. Everything just worked once the latest driver was installed, and we were up and running at the monitor's native resolution of 3840×2160.

So, the only thing left is to get to benchmarking! We'll be skipping all the synthetic benchmarks for this review and will be running seven game titles, manually benchmarking each of them with FRAPS. We'll be looking at minimum, average and maximum frame rates, and we'll also chart the first 90 seconds of each benchmark run in its entirety. This is a little different from how we normally benchmark video cards, but it should give you a better look at what is going on in the game titles, as we did experience some stuttering, freezing and a ton of tearing.
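To make the minimum/average/maximum numbers and the 90-second charts a bit more concrete, here is a minimal sketch of how per-second frame rates can be derived from a frame-time log. It assumes a sorted list of cumulative per-frame timestamps in milliseconds, similar in spirit to what FRAPS can log; it does not parse any actual FRAPS file format, the function names `fps_per_second` and `summarize` are hypothetical, and this is not necessarily how the charts in this review were produced.

```python
from statistics import mean


def fps_per_second(frame_times_ms, window_s=90):
    """Count frames in each whole second of a run, up to `window_s` seconds.

    Assumption: `frame_times_ms` is a sorted list of cumulative frame
    timestamps in milliseconds (hypothetical input format).
    """
    if len(frame_times_ms) < 2:
        return []
    start = frame_times_ms[0]
    counts = [0] * window_s
    for t in frame_times_ms:
        second = int((t - start) // 1000)
        if second >= window_s:
            break  # input is sorted, so every later frame is outside the window
        counts[second] += 1
    # Keep only seconds the log fully covers, so a trailing partial second
    # does not show up as an artificially low minimum.
    full_seconds = min(window_s, int((frame_times_ms[-1] - start) // 1000))
    return counts[:full_seconds]


def summarize(frame_times_ms, window_s=90):
    """Return (minimum, average, maximum) FPS over the charted window."""
    fps = fps_per_second(frame_times_ms, window_s)
    if not fps:
        raise ValueError("need at least one full second of frame data")
    return min(fps), mean(fps), max(fps)


# Synthetic example: 60 FPS for two seconds, then a stutter down to 30 FPS.
times = [i * 1000 / 60 for i in range(120)]
times += [2000 + j * 1000 / 30 for j in range(30)]
times.append(3000.0)  # closes out the third second
print(fps_per_second(times))  # [60, 60, 30]
print(summarize(times))       # (30, 50, 60)
```

Charting the per-second list, rather than reporting only the three summary numbers, is what exposes short dips like the one-second drop to 30 FPS above, which an average alone would hide.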