NVIDIA GeForce GTX Titan X Video Card Review

The GeForce GTX Titan X is The Baddest GPU of The Land

We’ve talked so much about the NVIDIA GeForce GTX Titan X video card lately that it feels odd to write an introduction for its official reveal, so we’ll just jump right into it. The NVIDIA GeForce GTX Titan X uses the big GM200 Maxwell GPU, as many expected, and has 3072 CUDA cores with a 1000MHz base clock and a 1075MHz boost clock. The incredible 12GB of GDDR5 memory used for the frame buffer sits on a 384-bit memory bus and runs at an effective 7010MHz. The end result is what NVIDIA is calling the World’s fastest GPU, and with specifications like that no other single-GPU card on the market will be able to touch this behemoth. Sure, the 12288MB of GDDR5 memory is overkill even in this day and age of 4K Ultra HD gaming, but for a flagship card who cares, right? The GeForce GTX Titan X was designed for gamers who want the best card that NVIDIA has to offer regardless of price, so they can game with the image quality settings cranked up no matter the resolution. NVIDIA calls these users ultra-enthusiast gamers and knows that they are not your typical price-conscious consumers.

The NVIDIA GeForce GTX Titan X is priced at $999 and the card is being hard launched today, so if you are reading this on launch morning, online retailers like Amazon, Newegg, TigerDirect, NCIX and others should be ready to take your money and ship you a GeForce GTX Titan X.

The NVIDIA GeForce GTX Titan X takes over as the flagship card from the GeForce GTX 980 that was introduced in September 2014. Over the past six months the GeForce GTX 980 has dominated the market by flat out overpowering the AMD Radeon R9 290X and offering an impressive feature set. NVIDIA has done a good job of promoting the new features that launched alongside Maxwell, like Voxel Global Illumination (VXGI), Multi-Frame Sample AA (MFAA), Dynamic Super Resolution (DSR) and DirectX 12 API with feature level 12.1 support. The NVIDIA GeForce GTX Titan X supports everything that the GeForce GTX 980 does, with more power to handle emerging Virtual Reality (VR) applications. Consumer VR headsets will be coming to market later this year and you’ll need a GPU that can handle whatever is coming.

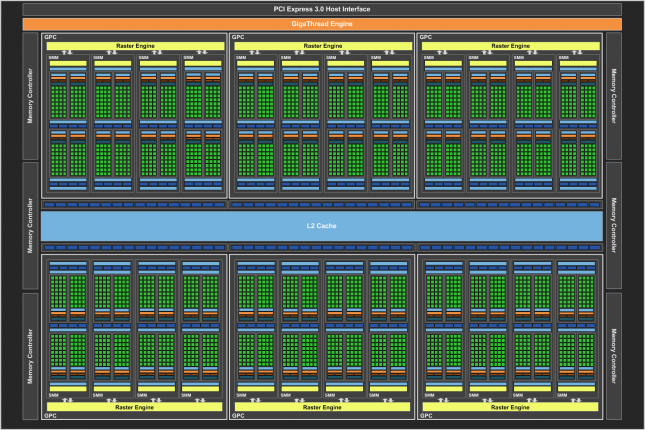

The GeForce GTX Titan X is powered by the full GM200 Maxwell GPU that has six Graphics Processing Clusters and a total of 24 Maxwell Streaming Multiprocessor units (SMM). Each SMM contains 128 CUDA cores and that is how you end up with an impressive 3072 CUDA cores that handle the pixel, vertex and geometry shading workloads. The Maxwell GM200 uses the same basic SMM design as the other Maxwell GPUs that are already out. The 3072 CUDA cores in the Titan X’s GM200 GPU are clocked at 1000MHz base and 1075MHz boost. The texture filtering is done by 192 texture units, which at the 1000MHz base clock works out to a texture filtering rate of 192 Gigatexels/sec. This means that the GeForce GTX Titan X can do texture filtering 33% faster than the GeForce GTX 980! NVIDIA has been increasing the L2 cache size in recent years and they did so again on the GM200, which has 3MB of L2 cache. The NVIDIA GK104 has just 512KB and the GM204 used on the GeForce GTX 980 has 2MB. The display/video engines remain unchanged from the GM204 GPU used on the GeForce GTX 980 video cards.
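The core count and texture rate quoted above follow from simple multiplication. The sketch below reproduces them, assuming one filtered texel per texture unit per clock at the base clock (the usual way such peak figures are derived, not something NVIDIA's material spells out here). The function names are ours, chosen for illustration.

```python
def cuda_cores(sm_count, cores_per_sm=128):
    """Total CUDA cores: number of SMM units times cores per SMM."""
    return sm_count * cores_per_sm


def texel_rate_gtexels(texture_units, core_clock_mhz):
    """Peak texture filtering rate in Gigatexels/sec.

    Assumption: each texture unit filters one texel per clock.
    """
    return texture_units * core_clock_mhz / 1000.0


titan_x_cores = cuda_cores(24)                 # 24 SMMs x 128 cores
titan_x_rate = texel_rate_gtexels(192, 1000)   # Titan X at base clock
gtx_980_rate = texel_rate_gtexels(128, 1126)   # GTX 980 at base clock

print(titan_x_cores, titan_x_rate, gtx_980_rate)
print(f"Titan X advantage: {titan_x_rate / gtx_980_rate - 1:.0%}")
```

With the GTX 980 at its 1126MHz base clock, the ratio comes out to roughly a one-third advantage for the Titan X, in line with the 33% figure.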

NVIDIA wanted to create a graphics card that was designed for 4K gaming and basically wanted to max out the performance. NVIDIA opted to go with 12GB of GDDR5 memory that runs at an effective 7010MHz on six 64-bit memory controllers (384-bit bus) to ensure that no one will run out of frame buffer when playing the latest game titles. This is good for 336.5GB/s of peak memory bandwidth, which is 50% more bandwidth than a GeForce GTX 980 has. You can spend all day playing game titles at 4K with ultra image quality settings only to find that none on the market today will use up 12GB of memory! Not to mention that even if you could find a game title that is able to use close to 12GB of memory, the frame rates won’t be high enough for the game to be actually playable! NVIDIA completely went overboard with the memory, but you won’t hear many complaining.
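For readers who want to check the bandwidth figure, peak memory bandwidth is the effective data rate multiplied by the bus width in bytes. The helper below is a minimal sketch of that arithmetic. Its name and the decimal GB convention (1 GB = 1000 MB) are our assumptions.

```python
def peak_bandwidth_gbs(effective_clock_mhz, bus_width_bits):
    """Peak memory bandwidth in GB/s.

    effective_clock_mhz: effective GDDR5 data rate (e.g. 7010 for Titan X)
    bus_width_bits: total memory bus width (e.g. 6 x 64 = 384)
    """
    bytes_per_transfer = bus_width_bits / 8
    return effective_clock_mhz * bytes_per_transfer / 1000.0


titan_x = peak_bandwidth_gbs(7010, 6 * 64)   # six 64-bit controllers
gtx_980 = peak_bandwidth_gbs(7000, 256)

print(f"Titan X: {titan_x:.1f} GB/s")
print(f"GTX 980: {gtx_980:.1f} GB/s")
print(f"Ratio:   {titan_x / gtx_980:.2f}x")
```

The Titan X result rounds to the 336.5GB/s quoted above, and the ratio against the GTX 980 is almost exactly 1.5x.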

| | Titan X | GTX 980 | GTX 780 | GTX 680 | GTX 580 |
| --- | --- | --- | --- | --- | --- |
| Microarchitecture | Maxwell | Maxwell | Kepler Refresh | Kepler | Fermi |
| Stream Processors | 3072 | 2048 | 2304 | 1536 | 512 |
| Texture Units | 192 | 128 | 192 | 128 | 64 |
| ROPs | 96 | 64 | 48 | 32 | 48 |
| Core Clock | 1000MHz | 1126MHz | 863MHz | 1006MHz | 772MHz |
| Shader Clock | N/A | N/A | N/A | N/A | 1544MHz |
| Boost Clock | 1075MHz | 1216MHz | 900MHz | 1058MHz | N/A |
| GDDR5 Memory Clock | 7,010MHz | 7,000MHz | 6,008MHz | 6,008MHz | 4,008MHz |
| Memory Bus Width | 384-bit | 256-bit | 384-bit | 256-bit | 384-bit |
| Frame Buffer | 12GB | 4GB | 3GB | 2GB | 1.5GB |
| FP64 | 1/32 FP32 | 1/32 FP32 | 1/24 FP32 | 1/24 FP32 | 1/8 FP32 |
| Memory Bandwidth (GB/s) | 336.5 | 224 | 288 | 192.3 | 192.4 |
| TFLOPS | 7 | 5 | 4 | 3 | 1.5 |
| GFLOPS/Watt | 28 | 30 | 15 | 15 | 6 |
| TDP | 250W | 165W | 250W | 195W | 244W |
| Transistor Count | 8.0B | 5.2B | 7.1B | 3.5B | 3B |
| Manufacturing Process | TSMC 28nm | TSMC 28nm | TSMC 28nm | TSMC 28nm | TSMC 40nm |
| Release Date | 03/2015 | 09/2014 | 05/2013 | 03/2012 | 11/2010 |
| Launch Price | $999 | $549 | $649 | $499 | $499 |

So, how does the GeForce GTX Titan X stack up to the other flagship models that NVIDIA has released over the past five years? The NVIDIA GeForce GTX Titan X is no slouch, and the 7 TFLOPS of compute power and 336.5 GB/s of memory bandwidth dominate the cards of yesteryear. The original Kepler GPU powered Titan is not shown in the table above, but the Titan X is said to have 2x the performance and 2x the power efficiency of that part. It should be noted that the GeForce GTX Titan X has 7.0 TFLOPS of single-precision floating-point performance and 0.2 TFLOPS of double-precision performance. If you want a card that can do double-precision tasks, you’ll need to look to the Titan Z, which is still available for just that.
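The compute figures can be roughly sanity-checked from the core count and clocks. The sketch below assumes the common convention of 2 floating-point operations per CUDA core per clock (one fused multiply-add) and applies the 1/32 FP64 rate from the table. The function name is ours, and the quoted 7 TFLOPS is presumably a rounded marketing figure, since the listed boost clock gives a slightly lower number.

```python
def peak_tflops(cuda_cores, clock_mhz, flops_per_core_per_clock=2):
    """Peak single-precision TFLOPS.

    Assumption: each core retires one fused multiply-add (2 FLOPs) per clock.
    cores x MHz x FLOPs gives MFLOPS, so divide by 1e6 for TFLOPS.
    """
    return cuda_cores * clock_mhz * flops_per_core_per_clock / 1e6


fp32_base = peak_tflops(3072, 1000)    # at the 1000MHz base clock
fp32_boost = peak_tflops(3072, 1075)   # at the 1075MHz boost clock
fp64_boost = fp32_boost / 32           # FP64 runs at 1/32 the FP32 rate

print(f"FP32 at base:  {fp32_base:.2f} TFLOPS")
print(f"FP32 at boost: {fp32_boost:.2f} TFLOPS")
print(f"FP64 at boost: {fp64_boost:.2f} TFLOPS")
```

Under these assumptions the card lands a little above 6 TFLOPS at base and around 6.6 TFLOPS at boost, with double-precision at about 0.2 TFLOPS, which is consistent with the figures quoted above once rounding and real-world boost behavior are taken into account.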

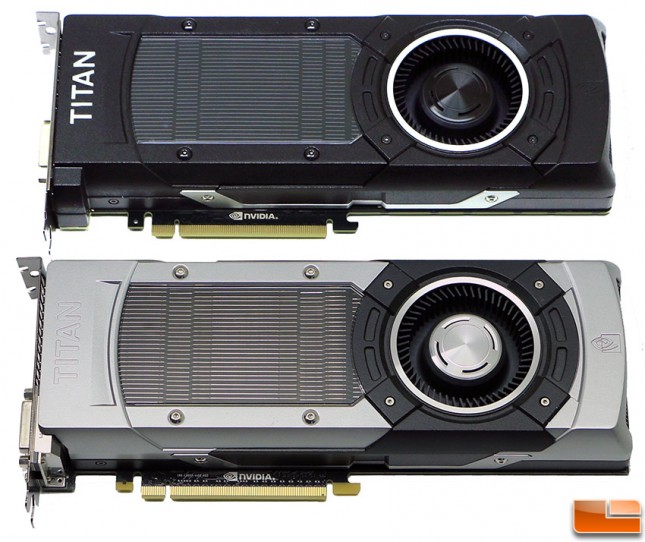

Let’s move along and take a look at the GeForce GTX Titan X reference card!