NVIDIA GeForce GTX 780 Ti Specs Leaked Already?

Last week AMD tried to crash NVIDIA’s press event by showing how its upcoming Radeon R9 290X video card was able to best the NVIDIA GeForce GTX 780 on a 4K monitor in a pair of game titles. NVIDIA one-upped that by announcing that the GeForce GTX 780 Ti video card would arrive in November and become the company’s new flagship video card. It sounds like this card could trump AMD’s Radeon R9 290X, but no details on the card’s hardware specifications were given at the event. It is safe to assume that the GeForce GTX 780 Ti will use the GK110 ‘Kepler’ GPU and will need to fit into the rather small performance gap between the GeForce GTX Titan and the GeForce GTX 780.

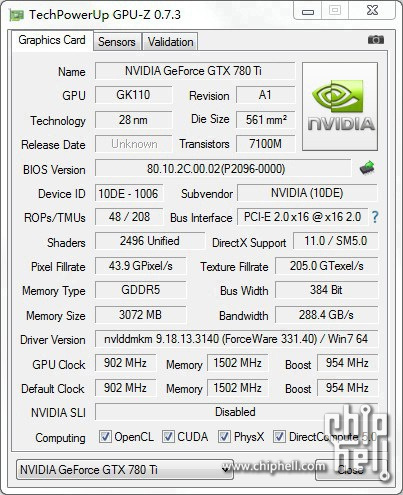

We’ve been hearing rumors since March 2013 about a GeForce GTX Titan Lite that would have 2496 CUDA cores and 5GB of GDDR5, so could this be the GeForce GTX 780 Ti?

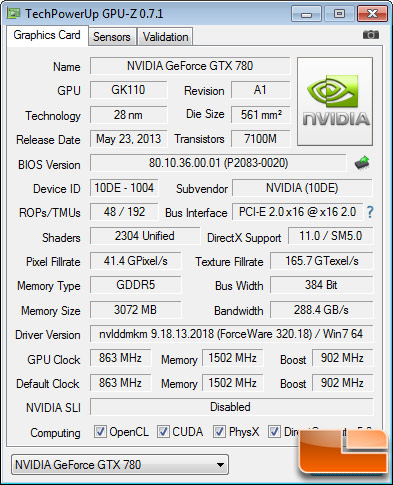

The current NVIDIA GeForce GTX 780 has 2304 CUDA cores (stream processors) with 192 texture units and 48 ROPs. The NVIDIA GeForce GTX 780 reference card (shown above) has a core clock speed of 863MHz (902MHz boost) and 3GB of GDDR5 memory running at 1502MHz (6008MHz effective).

According to the leaked GPU-Z shot, the GeForce GTX 780 Ti features the GK110 GPU and has 2496 CUDA cores, 208 TMUs and 48 ROPs. It also has a core clock speed of 902MHz (954MHz boost) and 3GB of GDDR5 memory at 1502MHz (6008MHz effective). GPU-Z details show the memory bandwidth remaining at 288.4 GB/s, but the pixel fillrate went up from 41.4 GPixels/s to 43.9 GPixels/s and the texture fillrate went from 165.7 GTexels/s to 205.0 GTexels/s! This is a 6% increase in pixel fillrate and a massive 23.7% increase in texture fillrate. The screenshot is unverified: it could be totally fake, but it could also be real.
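For readers who want to check the arithmetic, here is a minimal Python sketch of the percentage increases quoted above, along with the usual back-of-the-envelope formulas for theoretical fillrate and memory bandwidth. The function names are ours, the "one pixel or texel per unit per clock" model is a simplification, and the 384-bit memory bus is an assumption that does not come from the screenshot (it is what 288.4 GB/s at 6008MHz effective implies). The ROP count of 48 for the GTX 780 is the one that matches 41.4 GPixels/s at 863MHz.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100.0


def fillrate_g_per_s(units: int, core_clock_mhz: float) -> float:
    """Theoretical fillrate in G(pixels or texels)/s.

    Simplified model (an assumption): each ROP or TMU handles one
    pixel or texel per core clock cycle.
    """
    return units * core_clock_mhz / 1000.0


def memory_bandwidth_gb_s(bus_width_bits: int, effective_mhz: float) -> float:
    """Theoretical memory bandwidth in GB/s for a given bus width."""
    return bus_width_bits / 8 * effective_mhz / 1000.0


if __name__ == "__main__":
    # Percentage changes between the fillrates quoted in the article.
    print(f"Pixel fillrate:   +{percent_increase(41.4, 43.9):.1f}%")
    print(f"Texture fillrate: +{percent_increase(165.7, 205.0):.1f}%")

    # GTX 780 reference card: 48 ROPs, 192 TMUs, 863MHz base clock.
    print(f"GTX 780 pixel:    {fillrate_g_per_s(48, 863):.1f} GPixels/s")
    print(f"GTX 780 texture:  {fillrate_g_per_s(192, 863):.1f} GTexels/s")

    # Leaked GTX 780 Ti figures: 48 ROPs, 208 TMUs, 902MHz base clock.
    print(f"780 Ti pixel:     {fillrate_g_per_s(48, 902):.1f} GPixels/s")
    print(f"780 Ti texture:   {fillrate_g_per_s(208, 902):.1f} GTexels/s")

    # Assumed 384-bit bus at 6008MHz effective.
    print(f"Bandwidth:        {memory_bandwidth_gb_s(384, 6008):.1f} GB/s")
```

With these inputs the simple model reproduces the GTX 780 numbers (41.4 GPixels/s and 165.7 GTexels/s) and the 288.4 GB/s bandwidth. For the leaked card at its 902MHz base clock it gives roughly 43.3 GPixels/s and 187.6 GTexels/s, which does not line up exactly with the 43.9 and 205.0 shown in the screenshot. GPU-Z may derive its figures differently, so this is not proof either way, but it is one more reason to treat the leak with caution.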

AMD and NVIDIA are locked in a pretty fierce battle over these high-end cards and we can’t wait to see what the real specs are and who comes out on top! Last week we talked about how the move to 4K is being heavily marketed by both AMD and NVIDIA, and the AMD Radeon R9 290X and the NVIDIA GeForce GTX 780 Ti appear to be the cards to have for a single-GPU 4K setup.