NVIDIA GeForce GTS 450 SLI versus ATI Radeon HD 5770 CrossFire

Temperature Testing

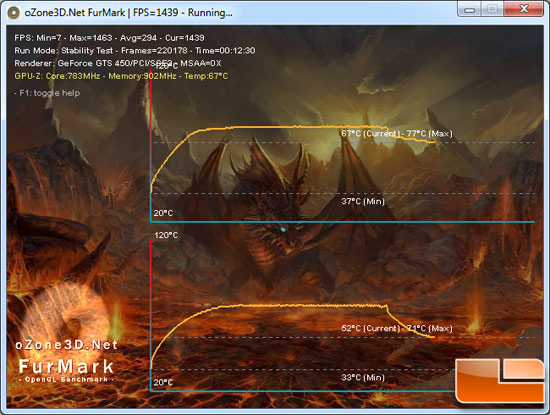

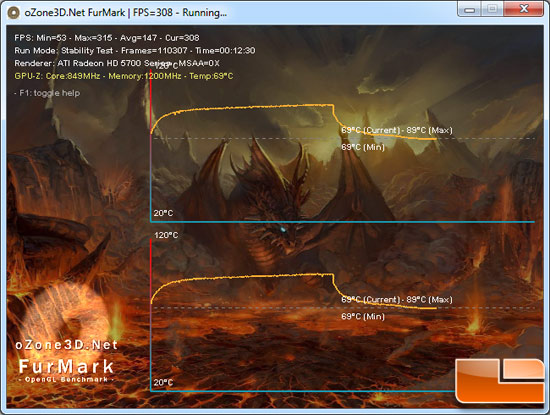

Since video card temperatures and the heat generated by next-generation cards have become an area of concern among enthusiasts and gamers, we want to take a closer look at how the SLI and CrossFire setups do at idle and under full load. We fired up FurMark and ran the stability test at 640×480, which was enough to put both GPUs at 100% load and get the highest load temperature possible. This application also charts the temperature results, so you can see how the temperature rises and levels off, which is very nice.

NVIDIA GeForce GTS 450 SLI:

NVIDIA SLI Results: The NVIDIA GeForce GTS 450 SLI setup idles at 34°C on the outside card, which gets the fresh air, and at 38°C on the inside card, which sucks in some of the warm air off the card sitting behind it. After 10 minutes at full load running the extreme burn-in test in FurMark 1.8.2, the outside card reached 71°C while the inside card went up to 77°C due to the lack of fresh air. As you can also see from the chart above, the inside card cools down much more slowly than the outside card.
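To put those four readings side by side, here is a minimal Python sketch of the arithmetic. It only uses the idle and load temperatures quoted above; the dictionary layout and the names `readings` and `temperature_rise` are our own illustration, not anything FurMark produces.

```python
# Temperatures in degrees Celsius, as reported above for the GTS 450 SLI setup.
# The structure and names here are illustrative assumptions, not tool output.
readings = {
    "outside card": {"idle": 34, "load": 71},
    "inside card": {"idle": 38, "load": 77},
}


def temperature_rise(card):
    """Return how far a card climbs from idle to full load."""
    return card["load"] - card["idle"]


# Idle-to-load rise for each card.
rises = {name: temperature_rise(temps) for name, temps in readings.items()}

# How much warmer the inside card runs than the outside card.
idle_gap = readings["inside card"]["idle"] - readings["outside card"]["idle"]
load_gap = readings["inside card"]["load"] - readings["outside card"]["load"]

for name, rise in rises.items():
    print(f"{name}: +{rise} C from idle to load")
print(f"inside vs. outside: +{idle_gap} C at idle, +{load_gap} C at load")
```

Both cards climb by a similar amount under load (37°C and 39°C), while the inside card's penalty for the warmer intake air grows from 4°C at idle to 6°C at load.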

ATI Radeon HD 5770 CrossFire:

The winner of the temperature test is clearly NVIDIA, with much lower idle and load temperatures. Since both systems consume roughly the same amount of power, we can conclude that the NVIDIA GPU cooler is dissipating the heat better than the ATI solution.
