Redshift v3.0.22 Benchmarks With Hardware-Accelerated GPU Scheduling

The last time Legit Reviews took a closer look at Redshift’s fully GPU-accelerated biased renderer for NVIDIA GeForce graphics cards was back in the fall of 2018. The NVIDIA GeForce RTX 2000 series graphics cards had just launched and we wanted to look at GPU performance in Redshift 2.6.

Since that article was published, Redshift Rendering Technologies was acquired by Maxon in 2019 and they have rolled out Redshift 3.0. Redshift 3.0 is a major update as it adds support for hardware-accelerated ray tracing via NVIDIA’s new RTX GPU architecture. NVIDIA has also released a slew of new GeForce RTX 2000 series and GeForce 1600 series cards since we last gave Redshift 2.6 a try.

Today we are armed with a Redshift 3.0 license and will be using the built-in benchmark scene to test nearly all of the current GeForce GTX and RTX offerings from NVIDIA. We’ll be starting with the NVIDIA GeForce GTX 1650 4GB graphics card that runs $149 and end with the NVIDIA GeForce RTX 2080 Ti 11GB that is priced at $1,199. We also included the NVIDIA GeForce GTX 1080 Ti and GeForce GTX 1070 as reference points for those that might not be running one of the newest GeForce models. In total, 13 cards were tested with 8 being Founders Edition and the remaining 5 being from add-in-board partners ASUS and EVGA.

Since we built a brand new AMD B550 based system powered by the AMD Ryzen 9 3950X processor just to benchmark the latest versions of Redshift, we had a clean install of Windows 10 64-bit May 2020 Update (v2004) at our disposal. This gave us the opportunity to also look at how the new “hardware-accelerated GPU scheduling” feature, introduced by Microsoft with the May 2020 Windows 10 update, works with the latest NVIDIA GeForce drivers.

When hardware-accelerated GPU scheduling is enabled in Windows 10 (you have to enable it manually and reboot) the CPU-GPU latency theoretically should be reduced. In applications like Redshift that means you should end up with faster rendering times! So, we’ll be taking a look at the time it takes to render the scene with the GPU in seconds as well as the CPU-GPU latency in milliseconds.
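For readers who want to confirm the setting took effect after the reboot, here is a minimal Python sketch. It rests on an assumption that is not from our testing: the toggle is widely reported to be stored in the `HwSchMode` registry value under `HKLM\SYSTEM\CurrentControlSet\Control\GraphicsDrivers`, with 2 meaning enabled and 1 meaning disabled. The function names are ours. The supported way to change the setting remains the Graphics settings page in Windows.

```python
import sys

# Assumption: commonly reported registry location for the Windows 10 v2004
# "hardware-accelerated GPU scheduling" toggle. Not taken from the article.
HAGS_KEY = r"SYSTEM\CurrentControlSet\Control\GraphicsDrivers"
HAGS_VALUE = "HwSchMode"


def describe_hwsch_mode(value):
    """Translate a raw HwSchMode value into a readable state."""
    if value is None:
        return "unknown (value not present)"
    if value == 2:
        return "enabled"
    if value == 1:
        return "disabled"
    return f"unrecognized ({value})"


def read_hwsch_mode():
    """Return the raw HwSchMode DWORD, or None when it cannot be read."""
    if sys.platform != "win32":
        return None  # the registry only exists on Windows
    import winreg  # Windows-only standard library module

    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, HAGS_KEY) as key:
            value, _value_type = winreg.QueryValueEx(key, HAGS_VALUE)
            return value
    except OSError:
        return None  # key or value missing, or access denied


print("Hardware-accelerated GPU scheduling:", describe_hwsch_mode(read_hwsch_mode()))
```

The script only reads the value, so it is safe to run before and after toggling the feature.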

The AMD B550 Test Platform

| Component | Brand/Model |
|---|---|
| Processor | AMD Ryzen 9 3950X |
| Motherboard | ASUS ROG B550-E Gaming |
| Memory | 32GB Corsair 3600MHz DDR4 |
| NVIDIA GPU | GeForce RTX 2080 Ti, GeForce RTX 2080 SUPER, GeForce RTX 2080, GeForce RTX 2070 SUPER, GeForce RTX 2070, GeForce RTX 2060 SUPER, GeForce RTX 2060, GeForce GTX 1660 Ti, GeForce GTX 1660 SUPER, GeForce GTX 1660, GeForce GTX 1650, GeForce GTX 1080 Ti, GeForce GTX 1070 |
| Solid-State Drive | Corsair Force MP600 1TB |
| Cooling | Corsair Hydro H115i Pro RGB |
| Power Supply | Corsair RM1000x |
| Case | Corsair 680X RGB |
| Operating System | Windows 10 64-bit |
| Monitor | Acer Predator X27 4K HDR |

Once Windows 10 Pro 64-bit May 2020 Update was installed, we enabled D.O.C.P. in the UEFI so the memory would run at 3600MHz, and that was it. The ASUS B550-E Gaming motherboard was running UEFI 0805, which had just come out on July 3rd, 2020, with AMD AGESA Combo-AM4 v2 1.0.0.2. All of the NVIDIA GeForce graphics cards used GeForce 451.48 WHQL Game Ready Drivers from June 2020.

The benchmarking was completed using the built-in benchmark script that downloads the Age of Vultures scene created by Toni Bratincevic (Intercepto). Toni just happens to be a principal digital matte artist at Blizzard Entertainment, which is pretty neat. This scene was picked from a contest that Redshift ran for three months back in 2016 and introduced in Redshift 2.5. We ran the scene three times with and without hardware-accelerated GPU scheduling enabled.

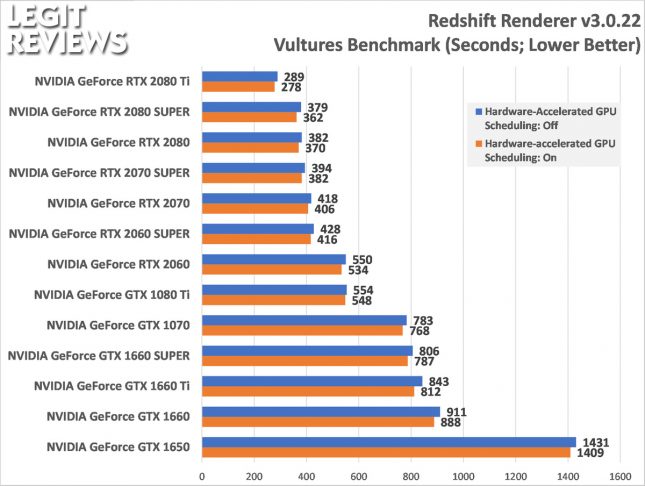

Rendering performance scales pretty nicely over the different models and of course the NVIDIA GeForce RTX 2080 Ti comes out on top with a completion time of 289 seconds straight out of the box. If you enable hardware-accelerated GPU scheduling in Windows, that time drops to 278 seconds, which is a time savings of 11 seconds. The average improvement was just under 3% across all the models, with the smallest gain being just 1.5% and the largest being 4.5%.
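To put the RTX 2080 Ti result in the same terms as those percentages, here is a quick sketch of the arithmetic. The helper name `time_saved` is ours; the 289 and 278 second figures are the results quoted above.

```python
def time_saved(before_s, after_s):
    """Return (seconds saved, percent of the original render time saved)."""
    saved = before_s - after_s
    return saved, saved / before_s * 100.0


# RTX 2080 Ti: scheduling disabled vs. enabled
saved_s, saved_pct = time_saved(289, 278)
print(f"RTX 2080 Ti: {saved_s} s saved, {saved_pct:.1f}% less render time")
# prints "RTX 2080 Ti: 11 s saved, 3.8% less render time"
```

At roughly 3.8%, the flagship card sits toward the upper end of the 1.5% to 4.5% range.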

The only card that performed unexpectedly at first glance was the GeForce GTX 1660 SUPER, which finished the render faster than the GeForce GTX 1660 Ti. That is likely due to memory bandwidth: both cards use GDDR6, but the 1660 Ti’s memory is rated at 288.1 GB/s while the 1660 SUPER’s is rated at 336 GB/s. Other than that, the results are pretty straightforward and make sense!
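Those bandwidth ratings follow from the per-pin data rate and the memory bus width. The sketch below assumes the public specifications for these cards, which are not stated in this article: 12 Gbps GDDR6 on the 1660 Ti, 14 Gbps GDDR6 on the 1660 SUPER, and a 192-bit bus on both. The function name is ours.

```python
def memory_bandwidth_gb_s(data_rate_gbps, bus_width_bits):
    """Peak memory bandwidth in GB/s: per-pin rate times bus width, over 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


# Assumed public specs: (per-pin data rate in Gbps, bus width in bits)
cards = {
    "GeForce GTX 1660 Ti": (12, 192),
    "GeForce GTX 1660 SUPER": (14, 192),
}

for name, (rate, width) in cards.items():
    print(f"{name}: {memory_bandwidth_gb_s(rate, width):.0f} GB/s")

ratio = memory_bandwidth_gb_s(14, 192) / memory_bandwidth_gb_s(12, 192)
print(f"The SUPER has {(ratio - 1) * 100:.1f}% more bandwidth")
# prints 288 GB/s, 336 GB/s, then "The SUPER has 16.7% more bandwidth"
```

The rounded 288 GB/s differs from the 288.1 GB/s rating only because the quoted figure uses a slightly more precise memory clock.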

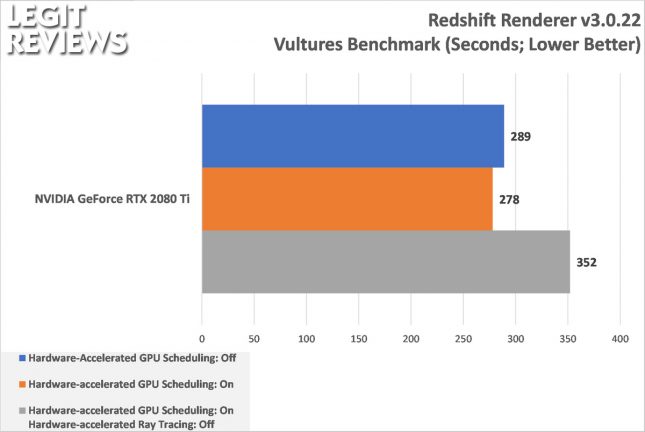

Just for fun, we disabled the new feature in Redshift 3.0 that enables RT core support, which is what allows hardware-accelerated ray tracing on NVIDIA’s latest RTX architecture. We spot checked this on the NVIDIA GeForce RTX 2080 Ti, and the render time went from 278 seconds to 352 seconds with that functionality disabled! So, Redshift 3.0 is able to speed up the render by 74 seconds by fully utilizing the RT cores in NVIDIA’s latest RTX GPU architecture!
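Expressed as a speedup factor rather than seconds, the RT core result works out as follows. This is just arithmetic on the two RTX 2080 Ti times above; the variable names are ours.

```python
rt_off_s = 352  # RT core support disabled
rt_on_s = 278   # RT core support enabled

speedup = rt_off_s / rt_on_s
reduction_pct = (rt_off_s - rt_on_s) / rt_off_s * 100.0

print(f"Speedup with RT cores: {speedup:.2f}x")
print(f"Render time reduction: {reduction_pct:.1f}%")
# prints "Speedup with RT cores: 1.27x" and "Render time reduction: 21.0%"
```

Keep in mind this is a single spot check on one card in one scene, so the factor may differ elsewhere.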

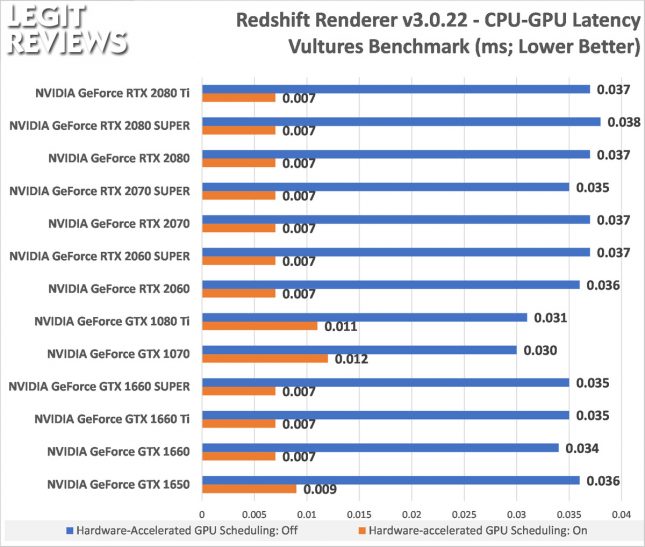

When we take a look at the CPU-GPU latency, we generally saw between 0.034ms and 0.038ms with the current generation ‘Turing’ architecture based GPUs and about 0.030ms to 0.031ms on last generation ‘Pascal’ architecture based GPUs. Now would be a great time to point out that these results were obtained in WDDM mode. NVIDIA GPUs can operate in one of two modes: TCC or WDDM. WDDM (Windows Display Driver Model) is the most common mode for GeForce graphics cards, whereas TCC (Tesla Compute Cluster) is more common on Tesla and Quadro cards where gaming graphics aren’t a concern.
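If you are not sure which mode your own card is running in, `nvidia-smi` can report it on Windows through the `driver_model.current` query field. The Python sketch below wraps that call. The function names are ours, and the sample line is illustrative rather than captured from our test system.

```python
import csv
import io
import subprocess

# nvidia-smi query that reports each GPU's name and current driver model (Windows)
QUERY = ["nvidia-smi", "--query-gpu=name,driver_model.current", "--format=csv,noheader"]


def parse_driver_models(csv_text):
    """Turn 'name, model' CSV lines into a list of (name, model) tuples."""
    rows = csv.reader(io.StringIO(csv_text), skipinitialspace=True)
    return [(row[0].strip(), row[1].strip()) for row in rows if len(row) >= 2]


def query_driver_models():
    """Run nvidia-smi and parse its output; return [] if it is unavailable or fails."""
    try:
        result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    except (OSError, subprocess.CalledProcessError):
        return []
    return parse_driver_models(result.stdout)


# Illustrative sample line, not real output from our test bench
sample = "GeForce RTX 2080 Ti, WDDM\n"
print(parse_driver_models(sample))
# prints [('GeForce RTX 2080 Ti', 'WDDM')]
```

On a machine with the NVIDIA driver installed, calling `query_driver_models()` returns one tuple per GPU.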

With hardware-accelerated GPU scheduling enabled, the CPU-GPU latency drops from 0.03ms-0.04ms down to just 0.007ms! We are seeing roughly an 80% reduction in CPU-GPU latency on current generation cards! This means that certain “stalls” inside Redshift (for example: when the CPU is waiting for the GPU to tell it it’s “done”) are shortened and that helps improve the rendering time of a scene.
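As a sanity check on that 80% figure, here is the reduction computed at both ends of the 0.034ms to 0.038ms range we measured on Turing cards. The helper name is ours.

```python
def reduction_pct(before_ms, after_ms):
    """Percent reduction going from before_ms to after_ms."""
    return (before_ms - after_ms) / before_ms * 100.0


after_ms = 0.007  # latency with hardware-accelerated GPU scheduling enabled
for before_ms in (0.034, 0.038):  # Turing range with scheduling disabled
    print(f"{before_ms}ms -> {after_ms}ms: {reduction_pct(before_ms, after_ms):.1f}% lower")
# prints 79.4% for 0.034ms and 81.6% for 0.038ms
```

Both ends of the range land within a couple of points of 80%.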

If you have any questions, please let us know in the comments below. Hopefully you enjoyed our look at Redshift 3.0 performance on a variety of graphics cards!