Top Game Developers Answer – 4K or NVIDIA G-Sync Monitor?

Are you a gamer thinking about upgrading your monitor? Ultra HD 4K monitors look amazing, but they cost a small fortune. For example, the ASUS PQ321Q 4K Ultra HD display runs $3,499, which is far more than most gamers are willing to pay. NVIDIA today announced G-Sync, a new technology that brings clear improvements to owners of GeForce GTX graphics cards. It synchronizes the monitor to the output of the GPU, instead of the GPU to the monitor. According to NVIDIA, the result is a tear-free, faster, smoother experience that redefines gaming.

NVIDIA already has industry support from monitor makers, with ASUS, BenQ, Philips and ViewSonic signed up to produce G-Sync enabled monitors. ASUS plans to release its G-Sync enhanced VG248QE gaming monitor in the first half of 2014 at a price of $399. The non-G-Sync version is currently available for $279.99, so the price difference between the two models appears to be about $119. That raises the question: which type of monitor will gamers be looking toward most?

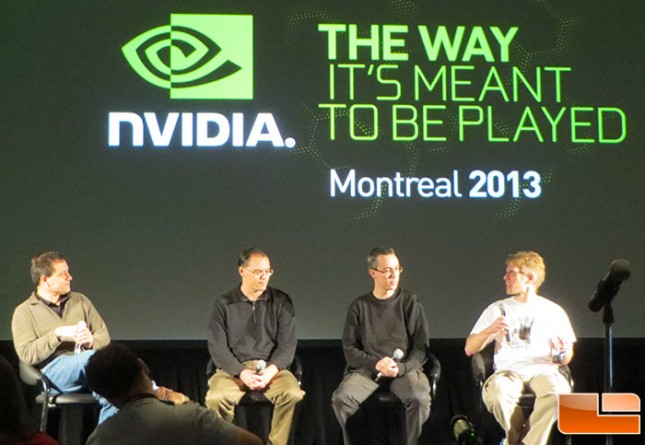

NVIDIA was able to get John Carmack, Johan Andersson, and Tim Sweeney to take questions, and they were asked whether they would rather have a 4K monitor or a G-Sync monitor. John Carmack, co-founder of id Software, answered first and quickly chose NVIDIA G-Sync, and the others didn't disagree. NVIDIA G-Sync monitors are certainly more affordable, but keep in mind that G-Sync can also be used in a 4K panel!