AMD Mantle & TrueAudio Patch for THIEF Tested


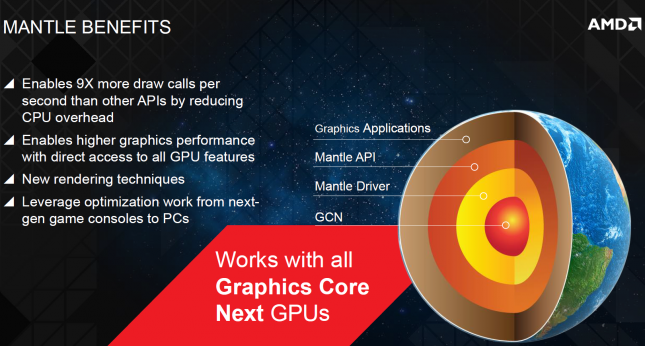

Mantle and TrueAudio are two new features that AMD offers on select Radeon R7 and R9 video cards built on its Graphics Core Next (GCN) architecture. Mantle is a new low-level graphics API that AMD says improves performance in games that support it.

Battlefield 4 was the first game to support Mantle, and Legit Reviews found that Mantle provided a significant performance increase with nothing more than a driver update and a patch from EA-DICE. Because Mantle is still new and unproven, it is a technology worth keeping an eye on as it matures.

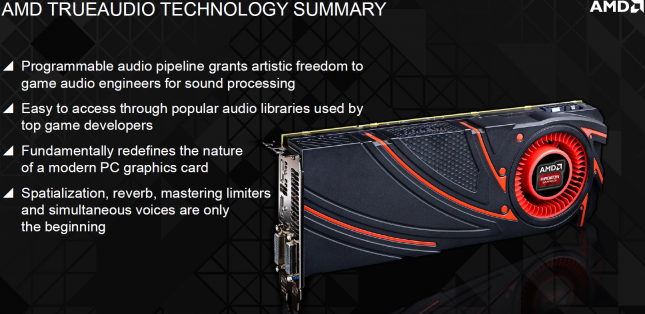

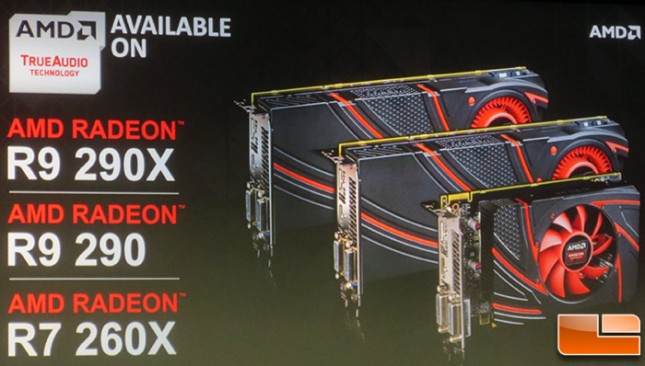

AMD’s TrueAudio technology is available on the AMD Radeon HD 7790, R7 260 and R9 290 series graphics cards. These cards include a dedicated audio processor, which allows audio processing to be offloaded from the CPU. This processor handles tasks such as audio compression and filtering, speech processing and recognition, simulation of acoustic environments, and creation of 3D sound effects. Other than one of the Radeon graphics cards that supports Mantle and TrueAudio, no additional hardware is necessary. TrueAudio works with your existing audio solution; game developers add TrueAudio support to their software, which allows audio processing to be moved from the CPU to the Xtensa audio cores.


While Battlefield 4 was the first game to support Mantle, Thief by Square Enix is the first game to support both Mantle and TrueAudio. In Thief, TrueAudio is used to enhance environmental sound effects. This was done by capturing a snapshot of the echo characteristics of a real-world location and importing it into software, which translates it into how sound should behave within the game's environments.

Let’s take a look at how Mantle affects the performance of Thief, and whether TrueAudio can enhance the game's audio.