Printing A 3D Battery Smaller Than 1mm Becomes Reality

At just a millimeter in width, a new lithium-ion battery built by a Harvard University and University of Illinois team is well suited to power tiny robots, computers and even medical devices. It is also the first battery ever fabricated with a 3D printer. The team published their work Tuesday in Advanced Materials (subscription required).

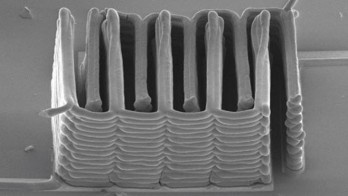

The team used a custom printer and ink to produce the batteries. A nozzle 0.03 millimeters (30 microns) wide deposited layers of nanoparticle-packed paste in a comb-like shape. A second printed comb nestled into the first, their teeth interlocked. These two combs functioned as the battery's electrodes, which conduct electricity. Once the printed material hardens, you have a battery made on a scale unlike anything the world has ever seen.

Think about what this could mean for commonly used items like hearing aids, wearable technology, robotic insects and anything else that runs on a battery. Heck, we might even be able to have batteries flowing through our bodies if there were ever a reason to do such a thing. This is a very cool use of 3D printers, and it goes to show that 3D printing and smaller batteries will both open the door to new innovations this decade and beyond.
