NVIDIA GeForce GTX 750 Ti Maxwell Performance Numbers Leaked?

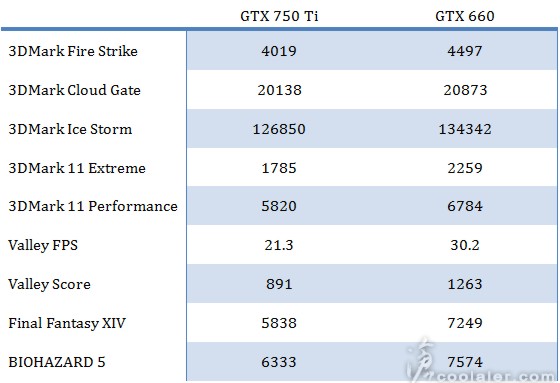

Last week we told you that numerous sites were reporting that the NVIDIA GeForce GTX 750 Ti would be powered by the first Maxwell GPU and would be released in February 2014. According to SweClockers, the NVIDIA GTX 750 Ti graphics card is expected to launch on February 18th. Today, Coolaler.com posted benchmark numbers for what it says is the GeForce GTX 750 Ti. Soothepain on Coolaler writes that the numbers came from a reliable source, but leaked benchmarks this far ahead of launch are usually totally fake. On top of that, this is a new GPU architecture, so anyone who really has a card is running extremely early beta drivers. With the caveat that these numbers are likely false, they show the NVIDIA GeForce GTX 750 Ti performing about 10 to 15% slower than the GeForce GTX 660. Does that shock anyone?

It will be interesting to look back at these numbers in a month to see whether they were accurate or entirely made up.