NVIDIA G-Sync Demonstrated on 4K Monitor For The First Time At CES 2014

NVIDIA had a large presence at CES 2014, and we were particularly interested in what they had on display for gaming at 4K Ultra HD resolutions.

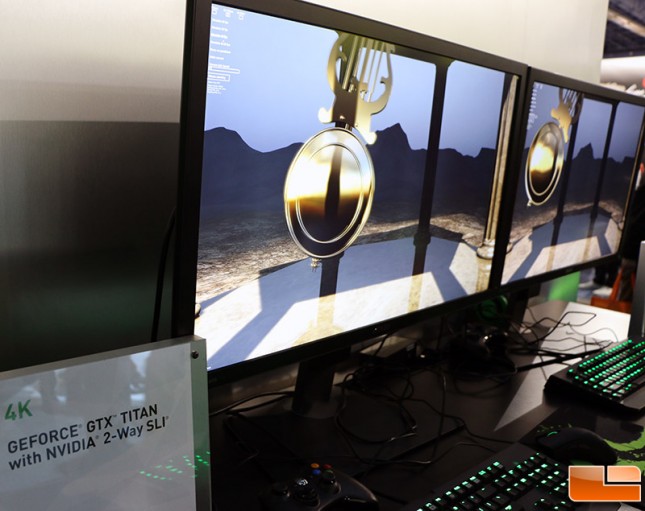

NVIDIA custom modded an ASUS PQ321Q 4K monitor with NVIDIA G-Sync to show off how incredible Ultra HD gaming (3840×2160) can look! We’ve seen plenty of demos with NVIDIA G-Sync running on the ASUS VG248QE at 1080p, but this really takes it to the next level! If you own the ASUS PQ321Q, don’t get too excited though, as neither NVIDIA nor ASUS plans to offer an upgrade kit for this model. There are, however, 4K NVIDIA G-Sync monitors in the works. The demo consisted of a pair of systems, both running NVIDIA GeForce GTX Titan 6GB video cards in 2-way SLI, with one monitor G-Sync enabled and the other not. There was a clear difference in smoothness between the two monitors, and this is the first time that we were able to play a real game on a 4K monitor with the image quality cranked up without seeing any ripping or tearing!

NVIDIA’s Tom Petersen offered to give Legit Reviews an overview and explanation of NVIDIA G-Sync and the 4K demo that NVIDIA put together just for CES 2014. You can watch that clip above!