Intel Outlines New Xeon Mesh Architecture for Data Centers

Intel is offering some details on its new “mesh” on-chip interconnect, which it positions as the foundation for its future data center processors. The details come ahead of the launch of the new Intel Xeon Scalable Processor platform, which Intel calls its biggest data center platform advancement of the decade. Intel CPU architect Akhilesh Kumar shared his perspective on the new architecture.

Kumar says that processors play a fundamental role in data center optimization, and that processor architecture affects the choices available for scalability and efficiency in the data center. Adding more cores to a multi-core processor aimed at data center use, and interconnecting them, poses several challenges. According to Kumar, the interconnects between the CPU cores, the memory hierarchy, and the I/O subsystems provide the pathways that tie the processor's internal components together. He compares a good interconnect to a well-designed highway, with the right number of lanes and with ramps placed exactly where they are needed.

The challenges included increasing the bandwidth between the cores, the on-chip cache hierarchy, the memory controllers, and the I/O controllers. Interconnect bandwidth has to scale with the design so that it does not become a bottleneck that limits system efficiency. Designers also had to reduce the latency of accessing data from the on-chip cache, from main memory, or from other cores. The other big challenge was finding energy-efficient ways to feed data from the on-chip cache and memory to the cores and I/O.

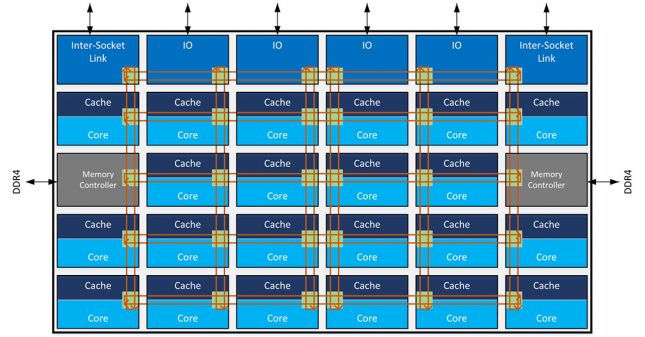

The new Intel Xeon Scalable processors implement a “mesh” on-chip interconnect topology designed to deliver the low latency and high bandwidth between cores, memory, and I/O controllers needed to meet these challenges. The image with all the blue squares is a representation of the mesh architecture Intel is using.

The architecture uses a mesh that connects cores, on-chip cache banks, memory controllers, and I/O controllers arranged in rows and columns, with wires and switches at each intersection so that traffic can turn. Compared with the ring interconnects of prior generations, the mesh offers more direct paths between components and more alternative pathways, which helps eliminate bottlenecks. The mesh can also run at a lower frequency and voltage while still delivering high bandwidth and low latency. According to Intel, the result is higher performance together with better energy efficiency.
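
To make the "more direct path" point concrete, here is a minimal Python sketch of an idealized model, not Intel's actual layout. It assumes one agent per stop, a single bidirectional ring for the older design, and a fully populated rectangular mesh with X-then-Y routing for the new one, and it counts the average number of hops between two stops. The function names are hypothetical and chosen only for illustration.

```python
from itertools import product


def ring_hops(a: int, b: int, n: int) -> int:
    """Shortest hop count between stops a and b on a bidirectional ring of n stops."""
    d = abs(a - b)
    return min(d, n - d)  # go whichever way around the ring is shorter


def mesh_hops(a: tuple[int, int], b: tuple[int, int]) -> int:
    """Hop count on a 2D mesh with X-then-Y routing (Manhattan distance)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def avg_ring_hops(n: int) -> float:
    """Average hops over all ordered pairs of distinct stops on an n-stop ring."""
    pairs = [(a, b) for a in range(n) for b in range(n) if a != b]
    return sum(ring_hops(a, b, n) for a, b in pairs) / len(pairs)


def avg_mesh_hops(rows: int, cols: int) -> float:
    """Average hops over all ordered pairs of distinct stops on a rows x cols mesh."""
    nodes = list(product(range(rows), range(cols)))
    pairs = [(a, b) for a in nodes for b in nodes if a != b]
    return sum(mesh_hops(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    # Compare a ring and a square mesh with the same number of stops.
    for rows, cols in [(4, 4), (6, 6)]:
        n = rows * cols
        print(f"{n} stops: ring {avg_ring_hops(n):.2f} hops, "
              f"{rows}x{cols} mesh {avg_mesh_hops(rows, cols):.2f} hops")
```

In this toy model, a 16-stop ring averages about 4.3 hops per transfer, while a 4x4 mesh averages about 2.7. At 36 stops the gap grows to roughly 9.3 versus 4.0 hops. The reason is that average ring distance grows linearly with the number of stops, while average mesh distance grows only with the mesh's side length. Real chips add factors this sketch ignores, such as link width, clock frequency, and contention.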

Intel’s new Xeon Scalable processors have a modular architecture with scalable resources for on-chip cache, memory, I/O, and connections to remote CPUs. These resources are distributed throughout the chip to minimize hot spots and other subsystem resource constraints, so the available resources scale as the number of processor cores increases. Because the latency differences between accessing different cache banks are negligible, software can treat the distributed cache banks as one large, unified cache.
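
One way a distributed cache can still look unified to software is for the hardware to pick each cache line's "home" bank from its address, so programs never need to know which bank holds their data. The Python sketch below illustrates that idea under loudly stated assumptions: the XOR-folding function `home_bank`, the 64-byte line size, and the 28-bank count are hypothetical choices for illustration. The article does not describe Intel's actual mapping.

```python
from collections import Counter

LINE_SIZE = 64  # bytes per cache line (assumed; typical for x86)


def home_bank(addr: int, num_banks: int) -> int:
    """Hypothetical mapping from a physical address to a last-level-cache bank.

    XOR-folds the cache-line index in 16-bit chunks so that consecutive lines
    land on different banks. This is NOT Intel's documented hash.
    """
    line = addr // LINE_SIZE
    folded = 0
    while line:
        folded ^= line & 0xFFFF  # fold in the next 16 bits of the line index
        line >>= 16
    return folded % num_banks


if __name__ == "__main__":
    # Spread a contiguous 64 KiB buffer over 28 hypothetical banks.
    counts = Counter(home_bank(a, 28) for a in range(0, 64 * 1024, LINE_SIZE))
    print(f"banks used: {len(counts)}, lines per bank: "
          f"{min(counts.values())}-{max(counts.values())}")
```

Because every bank is roughly equally close in the mesh, spreading lines evenly across banks like this balances traffic without forcing software to manage data placement.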

Intel says that the new architecture will provide performance and efficiency improvements across a broad range of usage scenarios and provide a foundation for future improvements for data center users.