Ashes of the Singularity DirectX 12 vs DirectX 11 Benchmark Performance

Ashes of the Singularity DirectX 12 vs DirectX 11 Gaming

Today we’ll be looking at one of the very first DirectX 12 game benchmarks by using Stardock’s real-time strategy game, Ashes of the Singularity. Ashes of the Singularity was developed with Oxide’s Nitrous game engine and tells the story of an existential war waged on an unprecedented scale across the galaxy. The Ashes of the Singularity Benchmark was never designed as a synthetic stress test, but as a real-world test that was used internally to measure overall system performance. That internal developer tool was released to the public today as a DX12 benchmark!

Here is what the developers had to say about the Ashes of the Singularity benchmark:

The benchmark is representative of real world gameplay. That means that while the strategic AI is scripted, everything else is being simulated just like it would be in game, such as tactical AI, physics, pathfinding, targeting, visibility, etc. To that extent, there will be more variability in the results than a synthetic benchmark would have. What we have noted however, is that performance has been consistent and several runs can be used to generate an accurate representation of performance. Even though we have been working with Microsoft on DirectX 12 for a while now, DirectX 12 and Windows 10 have only recently released to public. As such, we expect that the DirectX 12 results will only improve from here on out. We along with AMD, Microsoft, and Nvidia will continue to make improvements. With that in mind, this version should be treated as more of a performance preview to celebrate our excitement for Windows 10 and DirectX 12, as opposed to a hardcore synthetic test.
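Since the developers suggest combining several runs, here is a minimal sketch of how the average FPS from repeated runs could be summarized. The function name `summarize_runs` and the sample numbers are hypothetical and purely illustrative; they are not measured results or part of the benchmark itself.

```python
from statistics import mean, stdev


def summarize_runs(fps_per_run):
    """Return (mean, sample std dev, variation in %) for a list of average-FPS results."""
    if len(fps_per_run) < 2:
        raise ValueError("need at least two runs to estimate variability")
    avg = mean(fps_per_run)
    spread = stdev(fps_per_run)
    # Coefficient of variation: spread relative to the mean, as a percentage.
    return avg, spread, 100.0 * spread / avg


# Hypothetical numbers for illustration only, not measured results.
example_runs = [40.0, 42.0, 41.0]
avg, spread, cv = summarize_runs(example_runs)
print(f"mean {avg:.1f} FPS, std dev {spread:.2f} FPS, variation {cv:.1f}%")
```

A small variation percentage across runs would support the developers' note that performance is consistent; a large one would suggest running the benchmark more times before drawing conclusions.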

Ashes of the Singularity Benchmark Technical Features:

- Multi-Threaded Performance: Compared to DirectX 11, which mixed single-threaded and multithreaded work, Ashes of the Singularity will see huge gains in complex workloads under DirectX 12. This will allow for a significantly improved balance of workload between CPU cores.

- (Coming Soon) Explicit Multi-GPU Support: With DirectX 12 comes the ability to use multiple GPUs. This allows for better control of multi-GPU rendering by the developer, thus controlling the frame. Without this ability, the integrated GPU would remain idle, an untapped resource that could otherwise help with rendering and speed up the frame.

- Asynchronous Shaders: This allows work for the GPU to be scheduled more flexibly. Traditionally, the GPU’s command queue would have had stalls; DirectX 12 can fill those gaps with other work, essentially providing more work done for free.

- Explicit Frame Management: DirectX 12 will see a reduction in latency, which will provide a more responsive game experience. This will also allow tracking of specific information, such as whether something is GPU or CPU bound.

- Updated Memory Management Design: A radical change in the memory management design allows developers to remove traditional performance issues such as micro-stutters that have plagued D3D11.

- DirectX 12 has taken great strides to prevent “screen tearing” by defaulting to having images rendered at the screen’s refresh rate (which would result in frame rate limiting). However, for benchmark purposes, we have taken steps to bypass this to give a more accurate representation of potential performance.

- MSAA is implemented differently on DirectX 12 than on DirectX 11. Because it is so new, it has not been optimized yet by us or by the graphics vendors. During benchmarking, we recommend disabling MSAA until we (Oxide/Nvidia/AMD/Microsoft) have had more time to assess best use cases.

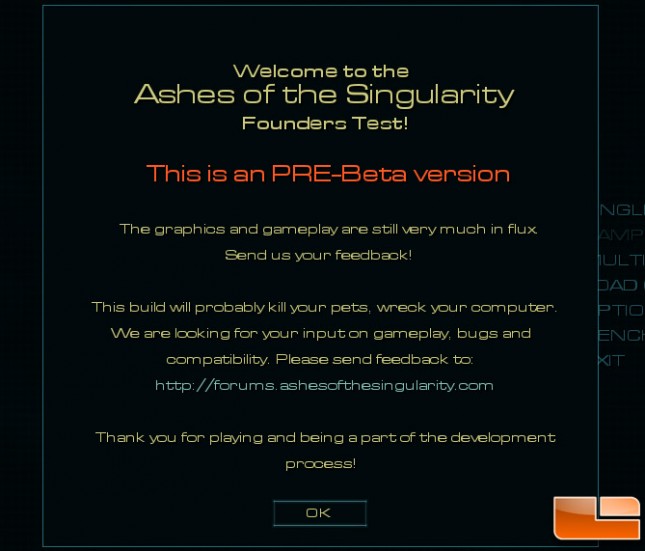

When you launch the Ashes of the Singularity Benchmark you are greeted with this screen that clearly notes that this is a pre-Beta build that “will probably kill your pets, wreck your computer.”

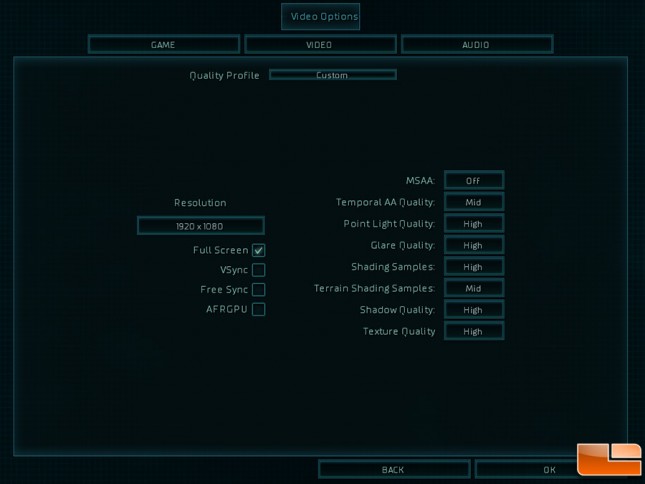

We disabled MSAA, since there are known issues with having it enabled, as well as VSync. We left the Temporal AA Quality and Terrain Shading Samples on ‘Mid’ and the other image quality settings at their default value of ‘High’. We are using an EVGA GeForce GTX 960 SSC 4GB video card for testing and set the screen resolution to 1920 x 1080. This card was selected because, at $229.99 shipped, it is what we would consider a mainstream video card that is popular right now.

Let’s check out the results on the next page!