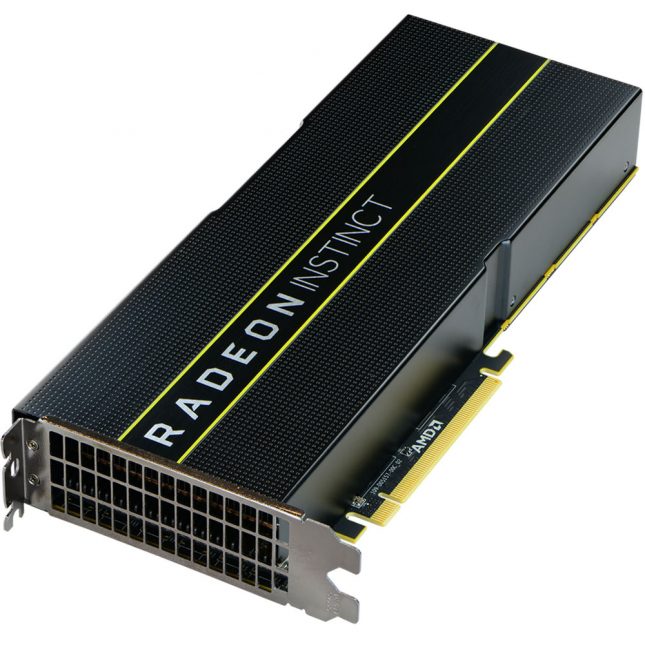

AMD Radeon Instinct MI25 Leads New Class of Deep Learning Accelerators

AMD officially unveiled its new Radeon Instinct family of accelerators for deep learning datacenter applications this week in Austin, Texas. The flagship model, and the one getting the most attention, is the Radeon Instinct MI25. It pairs the latest Vega 10 GPU, with 4,096 stream processors, with 16GB of HBM2 memory on a dual-slot card rated at a 300W TDP. Despite that 300W TDP, the designers found a way to make it a passively cooled single-GPU card!

AMD Radeon Instinct MI25 Deep Learning Accelerator Specifications:

- Vega 10 Architecture built on 14nm FinFET process

- 64 Next-Gen Compute Units

- 4096 Stream Processors

- 24.6 TFLOPS Peak Half Precision (FP16)

- 12.3 TFLOPS Peak Single Precision (FP32)

- 768 GFLOPS Double Precision (FP64)

- 16GB HBM2 High Bandwidth Cache (Memory)

- 484GB/sec Memory Bandwidth

- 300W TDP

- PCIe Form Factor

- Full Height Dual Slot

- Passive Cooling
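The three precision figures in the list above are linked by fixed rate ratios. The sketch below assumes (this is not quoted from AMD) that Vega 10 runs packed FP16 at twice its FP32 rate and FP64 at 1/16 of it. Starting from the 12.3 TFLOPS FP32 figure, those ratios reproduce the 24.6 TFLOPS FP16 number and roughly 769 GFLOPS of FP64, in line with the 768 GFLOPS listed.

```python
# Peak-rate ratios for the Radeon Instinct MI25, derived from its FP32 figure.
# Assumptions (not stated in the article): FP16 runs at 2x the FP32 rate
# (packed math) and FP64 runs at 1/16 of the FP32 rate.
FP32_TFLOPS = 12.3       # peak single precision, from the spec list
FP16_RATIO = 2.0         # assumed packed-FP16 speedup over FP32
FP64_RATIO = 1.0 / 16.0  # assumed FP64 fraction of the FP32 rate


def derived_rates(fp32_tflops):
    """Return peak FP16, FP32 and FP64 throughput in TFLOPS."""
    return {
        "FP16": fp32_tflops * FP16_RATIO,
        "FP32": fp32_tflops,
        "FP64": fp32_tflops * FP64_RATIO,
    }


rates = derived_rates(FP32_TFLOPS)
for precision, tflops in rates.items():
    # Print in GFLOPS so the FP64 figure is easy to compare with the spec list.
    print(f"{precision}: {tflops * 1000:.0f} GFLOPS")
```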

AMD also offers two other models, the Radeon Instinct MI8 and Radeon Instinct MI6, which use the older ‘Fiji’ and ‘Polaris’ GPU architectures respectively, for those who don’t need something so powerful.

| Model | Compute Units | Peak TFLOPS | Memory Size | Memory Bandwidth |
|---|---|---|---|---|
| Radeon Instinct MI25 | 64 (4,096 Stream Processors) | 24.6 FP16 / 12.3 FP32 | 16 GB HBM2 | 484 GB/s |
| Radeon Instinct MI8 | 64 (4,096 Stream Processors) | 8.2 FP16 and FP32 | 4 GB HBM1 | 512 GB/s |
| Radeon Instinct MI6 | 36 (2,304 Stream Processors) | 5.7 FP16 and FP32 | 16 GB GDDR5 | 224 GB/s |
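Peak TFLOPS figures for GPUs like these are usually computed as stream processors × 2 operations per clock (one fused multiply-add) × engine clock. The sketch below, a hypothetical helper rather than anything AMD publishes, runs that formula backwards to estimate the engine clock each card needs to reach its FP32 rating. The 2-FLOPs-per-clock figure is an assumption; the stream-processor counts and TFLOPS values come from the table above.

```python
# Hypothetical helper: infer the peak engine clock implied by a card's
# stream-processor count and its peak FP32 TFLOPS figure.
# Assumption: each stream processor retires one fused multiply-add (FMA)
# per clock, counted as 2 floating-point operations.
FLOPS_PER_SP_PER_CLOCK = 2

# name: (stream processors, peak FP32 TFLOPS), taken from the table above
CARDS = {
    "Radeon Instinct MI25": (4096, 12.3),
    "Radeon Instinct MI8": (4096, 8.2),
    "Radeon Instinct MI6": (2304, 5.7),
}


def implied_clock_ghz(stream_processors, fp32_tflops):
    """Clock (GHz) at which the given SP count reaches the given peak TFLOPS."""
    flops = fp32_tflops * 1e12
    return flops / (stream_processors * FLOPS_PER_SP_PER_CLOCK) / 1e9


for name, (sps, tflops) in CARDS.items():
    print(f"{name}: ~{implied_clock_ghz(sps, tflops):.2f} GHz")
```

Under that assumption the MI25 works out to roughly 1.50 GHz, the MI8 to about 1.00 GHz and the MI6 to about 1.24 GHz.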

Here are the details of the three accelerator cards from the press release that AMD sent out yesterday afternoon.

- The Radeon Instinct MI25 accelerator, based on the Vega GPU architecture with a 14nm FinFET process, will be the world's ultimate training accelerator for large-scale machine intelligence and deep learning datacenter applications. The MI25 delivers superior FP16 and FP32 performance in a passively-cooled single GPU server card with 24.6 TFLOPS of FP16 or 12.3 TFLOPS of FP32 peak performance through its 64 compute units (4,096 stream processors). With 16GB of ultra-high bandwidth HBM2 ECC GPU memory and up to 484 GB/s of memory bandwidth, the Radeon Instinct MI25's design is optimized for massively parallel applications with large datasets for Machine Intelligence and HPC-class system workloads.

- The Radeon Instinct MI8 accelerator, harnessing the high-performance, energy-efficient Fiji GPU architecture, is a small form factor HPC and inference accelerator with 8.2 TFLOPS of peak FP16|FP32 performance at less than 175W board power and 4GB of High-Bandwidth Memory (HBM) on a 4096-bit memory interface. The MI8 is well suited for machine learning inference and HPC applications.

- The Radeon Instinct MI6 accelerator, based on the acclaimed Polaris GPU architecture, is a passively cooled inference accelerator with 5.7 TFLOPS of peak FP16|FP32 performance at 150W peak board power and 16GB of ultra-fast GDDR5 GPU memory on a 256-bit memory interface. The MI6 is a versatile accelerator ideal for HPC and machine learning inference and edge-training deployments.
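Memory bandwidth follows from bus width × per-pin data rate ÷ 8. The sketch below runs that formula backwards to find the per-pin data rate implied by each card's stated bandwidth. The MI6's 256-bit bus width is from AMD's press release; the MI25's 2048-bit width (two HBM2 stacks) and the MI8's 4096-bit width (four HBM stacks) are assumptions about the Vega 10 and Fiji GPUs, not figures from this article.

```python
# Sketch: memory bandwidth (GB/s) = bus width (bits) x per-pin rate (Gbps) / 8.
# Bus widths: MI6's 256-bit figure is from AMD's press release; the MI25's
# 2048-bit and MI8's 4096-bit widths are assumptions about Vega 10 and Fiji.
CARDS = {
    # name: (bus width in bits, stated bandwidth in GB/s)
    "Radeon Instinct MI25": (2048, 484),
    "Radeon Instinct MI8": (4096, 512),
    "Radeon Instinct MI6": (256, 224),
}


def bandwidth_gbs(bus_bits, gbps_per_pin):
    """Peak bandwidth in GB/s for a bus of `bus_bits` pins at `gbps_per_pin`."""
    return bus_bits * gbps_per_pin / 8


def implied_pin_rate_gbps(bus_bits, bandwidth):
    """Per-pin data rate (Gbps) needed to reach `bandwidth` GB/s."""
    return bandwidth * 8 / bus_bits


for name, (bits, bw) in CARDS.items():
    rate = implied_pin_rate_gbps(bits, bw)
    # Recompute the bandwidth from the implied rate as a round-trip check.
    print(f"{name}: {bits}-bit bus at {rate:.2f} Gbps/pin -> "
          f"{bandwidth_gbs(bits, rate):.0f} GB/s")
```

Under those bus-width assumptions, the MI6's GDDR5 runs at 7 Gbps per pin, the MI8's HBM at 1 Gbps and the MI25's HBM2 at roughly 1.89 Gbps.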