NVIDIA GeForce GTX 280 Graphics Cards by EVGA and PNY

Power Consumption and Final Thoughts

Power Consumption

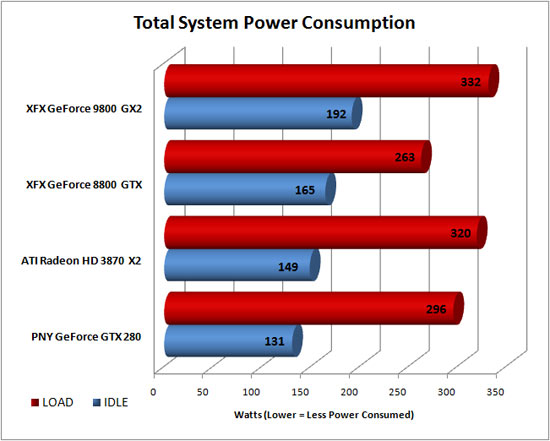

For testing power consumption, we took our test system and plugged it into a Seasonic Power Angel. For idle numbers, we allowed the system to idle on the desktop for 15 minutes and took the reading. For load numbers, we measured the peak wattage used by the system while running the game Call of Duty 4 at 1280×1024 with high graphics quality.

Power Consumption Results: Looking at total system power consumption, the PNY GeForce GTX 280 compares well with the other cards, and its power draw is reasonable. At idle, the GeForce GTX 280 actually consumed the least power of any card we tested! This is thanks in part to the GeForce GTX 200 GPUs' more dynamic and flexible power management architecture compared with previous-generation NVIDIA GPUs. The GTX 200 series uses four performance/power modes:

- Idle/2D power mode (approx. 25 W)
- Blu-ray DVD playback mode (approx. 35 W)
- Full 3D performance mode (varies; worst-case TDP 236 W)
- HybridPower mode (effectively 0 W)

The new GTX 200 series of video cards has utilization monitors (digital watchdogs) that constantly check the amount of traffic inside the GPU. Based on the utilization these monitors report, the GPU driver can dynamically select the appropriate performance mode (i.e., a defined clock and voltage level) that minimizes the power draw of the graphics card, all fully transparent to the end user. The GPU also has clock-gating circuitry, which shuts down blocks of the GPU that are not in use at any given moment (on a timescale of milliseconds), further reducing power during periods of non-peak GPU utilization. The ultimate in power savings would be to use the card on a HybridPower motherboard, which can disable the card entirely, as we showed you in our recent article on HybridSLI and HybridPower.
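To make the utilization-driven mode switching a little more concrete, here is a minimal Python sketch of a driver picking one of the modes listed above from recent utilization samples. The `PowerMode` names, the `select_mode` function and the 20% threshold are all hypothetical; NVIDIA has not published its actual driver heuristics. HybridPower is left out because it switches the card off entirely rather than choosing a clock/voltage level.

```python
from enum import Enum
from statistics import mean
from typing import Sequence


class PowerMode(Enum):
    # Approximate power figures for the GTX 200 modes listed in the article.
    IDLE_2D = "Idle/2D (approx. 25 W)"
    VIDEO_PLAYBACK = "Blu-ray playback (approx. 35 W)"
    FULL_3D = "Full 3D (worst-case TDP 236 W)"


# Hypothetical cutoff: average utilization at or above this selects full 3D clocks.
BUSY_THRESHOLD = 0.20


def select_mode(samples: Sequence[float], video_decode_active: bool) -> PowerMode:
    """Choose a mode from recent utilization samples, each in [0.0, 1.0].

    Averaging several samples keeps one brief spike from forcing a mode switch.
    """
    if not samples:
        raise ValueError("need at least one utilization sample")
    if any(not 0.0 <= s <= 1.0 for s in samples):
        raise ValueError("utilization samples must be between 0.0 and 1.0")
    load = mean(samples)
    if load >= BUSY_THRESHOLD:
        return PowerMode.FULL_3D
    if video_decode_active:
        return PowerMode.VIDEO_PLAYBACK
    return PowerMode.IDLE_2D


if __name__ == "__main__":
    # Desktop idle, movie playback, and a running game, respectively.
    print(select_mode([0.02, 0.03, 0.01], video_decode_active=False))
    print(select_mode([0.05, 0.08, 0.06], video_decode_active=True))
    print(select_mode([0.95, 0.90, 0.98], video_decode_active=False))
```

This only models mode selection. The block-level clock gating described above works independently of it, and a real driver would likely add hysteresis so the card does not bounce between modes.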

Final Thoughts

After spending 20 pages talking about the GeForce GTX 280, it seems like we have only scratched the surface of what needs to be said about this card. If you haven't noticed yet, graphics cards are getting more complex. Now that they do more than gaming, it is no longer enough to benchmark a few games and call a card the next best thing. The brutal reality is that the industry is changing. Optimized PC design is the way things are moving, and you either need to get on the bandwagon or get run over. For years the industry was about Enterprise Computing, which was all about productivity. Today, the industry is all about Visual Computing, which is about creativity and self-expression through pictures, movies, gaming and so on. With both Windows Vista and Mac OS X enabling 3D content, we all now have access to visual computing, and we will soon be surrounded by applications and utilities, like PicLens, that take it to the next level. If you think about it, we are already well into the change, and if you think a graphics card is just for gaming, you are in for a rude awakening.

The GeForce GTX 280 is one of the first new graphics cards to be designed since the American economy slowed down, and you can tell that being 'green' played a role in the development of the new GTX 200 series. NVIDIA spent more time than usual tweaking the power-saving features of the core, and it really shows. NVIDIA did a great job here: even though they added more features and transistors to the core, they managed to reduce idle power consumption and keep load power consumption at a reasonable level. They easily get an "A" in our books for that!

When it comes to gaming performance, the GeForce GTX 280 was the fastest single-GPU video card that we have ever tested. It beat the dual-GPU GeForce 9800 GX2 and Radeon HD 3870 X2 in a number of benchmarks. The GeForce 9800 GX2 is still a great graphics card, as our gaming results showed. The GeForce 8800 GTX finally started to show its age and was significantly slower than the GeForce GTX 280 in the benchmarks. If you have a GeForce 8800 GTX, you finally have a single-GPU card to upgrade to that won't leave you second-guessing whether it is worth it.

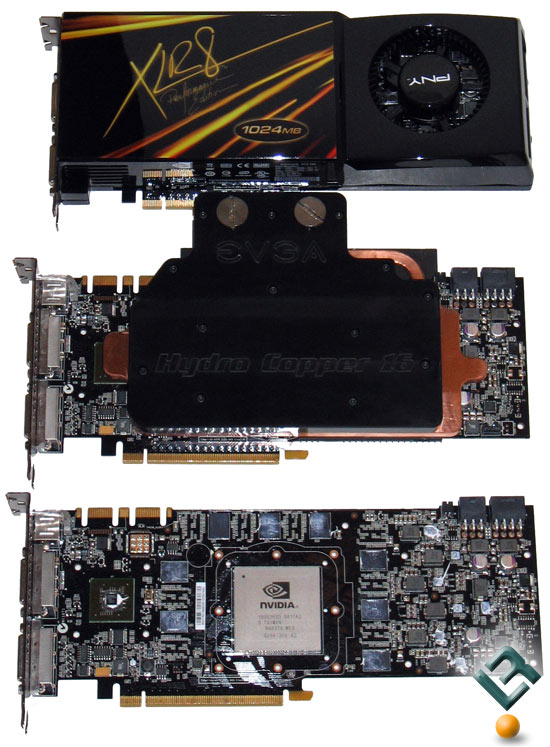

The EVGA GeForce GTX 280 Hydro Copper 16 that we had for testing was a very nice video card. This was a prototype card, and we thank EVGA for getting one out to us before the launch of the GTX 200 series. Unfortunately, we got the card on Saturday afternoon and had to leave town on Sunday morning for briefings, so we had very little time with it. We tried a couple of different water-cooling kits, but none of the pumps we had on hand provided enough flow to properly cool the GTX 280 core. The 670 MHz core clock is impressive, and the card was a good 10% faster than the stock-clocked GeForce GTX 280. At $879, the EVGA GeForce GTX 280 Hydro Copper 16 won't be for everyone, but if you can afford it and want water cooling, this is the card.

The PNY GeForce GTX 280 performed like a champ and is based on the reference design. With a $649 MSRP the card is still pricey, but no more so than the GeForce 8800 GTX was when it came out in late 2006. We ran Folding@home on this card for a few days and fell in love with its performance, first while gaming and then in the points per day it was adding to our folding team.

Overall, the GeForce GTX 280 graphics card was a winner in our books, and it made a difference while gaming, which is the most important thing. The game where we noticed the performance gains most was actually Age of Conan, with the image quality cranked up at 1920×1200. Age of Conan: Hyborian Adventures passed the astounding 'One Million Copies Shipped' milestone less than three weeks after launch, so that is a huge potential market in the months to come.

Legit Bottom Line: The NVIDIA GeForce GTX 280 series graphics cards usher in a new era of GPU computing and deliver improved gaming performance to boot. The only losers in this deal are the competitors (ATI and Intel), if they have no answer.
