AMD Radeon R9 290X vs NVIDIA GeForce GTX 780 at 4K Ultra HD

Metro: Last Light

Metro: Last Light is a first-person shooter developed by Ukrainian studio 4A Games and published by Deep Silver. The game is set in a post-apocalyptic world and combines action-oriented gameplay with survival horror elements. It uses the 4A Engine and was released in May 2013.

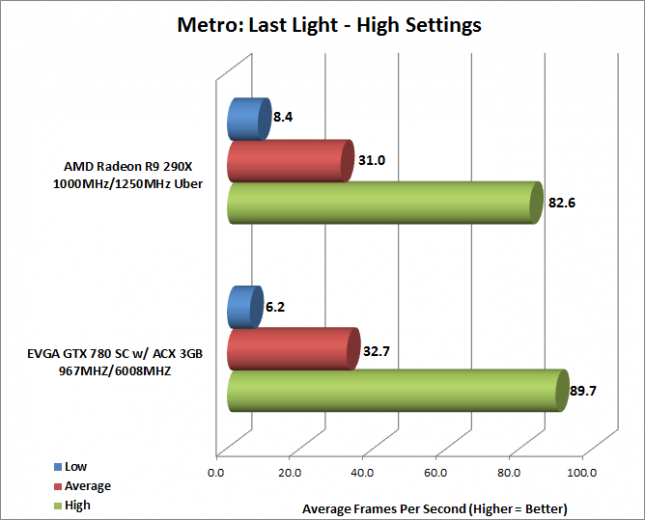

Metro: Last Light was benchmarked with High image quality settings.

Benchmark Results: In Metro: Last Light, the AMD Radeon R9 290X had a lower average FPS than the EVGA GeForce GTX 780 Superclocked, but it held a higher frame rate when performance bottomed out. Overall, the two cards are pretty evenly matched in this title.

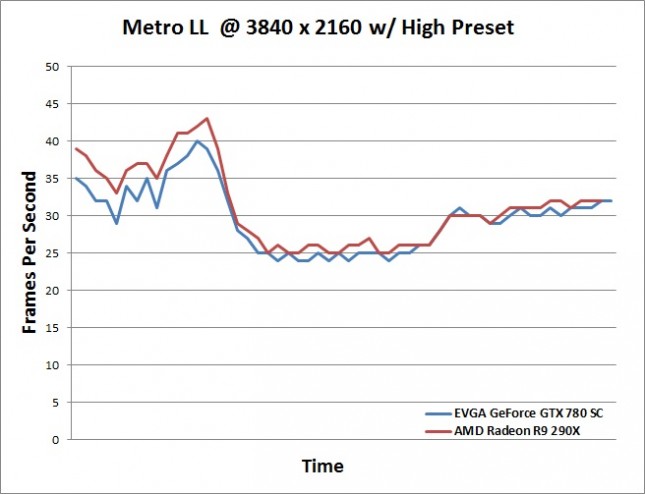

Taking a closer look at the first 90 seconds of our FRAPS log, we can see how evenly matched the two cards are. The log shows the AMD Radeon R9 290X doing well in the first portion of our benchmark run.
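For readers who want to pull the same kind of numbers out of their own logs, here is a minimal Python sketch of how average and minimum FPS over the first 90 seconds could be derived. It assumes a frametimes CSV with one header row and a cumulative timestamp in milliseconds in the second column. The function names are hypothetical, and this is not necessarily how the charts in this article were produced.

```python
import csv
import io


def parse_frametimes(text):
    """Read cumulative timestamps (ms) from CSV text.

    Assumed layout: one header row, then rows of "frame, time_ms".
    """
    reader = csv.reader(io.StringIO(text))
    next(reader, None)  # skip the header row
    return [float(row[1]) for row in reader if len(row) >= 2]


def per_second_fps(timestamps_ms, window_s=90):
    """Count frames in each complete second of the first window_s seconds."""
    if not timestamps_ms:
        return []
    start = timestamps_ms[0]
    span_ms = min(timestamps_ms[-1] - start, window_s * 1000.0)
    n_seconds = int(span_ms // 1000)  # ignore a trailing partial second
    counts = [0] * n_seconds
    for t in timestamps_ms:
        idx = int((t - start) // 1000)
        if 0 <= idx < n_seconds:
            counts[idx] += 1
    return counts


def summarize(counts):
    """Return average and minimum FPS from per-second frame counts."""
    if not counts:
        raise ValueError("need at least one complete second of data")
    return {"avg_fps": sum(counts) / len(counts), "min_fps": min(counts)}


if __name__ == "__main__":
    # Synthetic data: one second at 60 FPS followed by one second at 30 FPS.
    stamps = [i * 1000 / 60 for i in range(60)]
    stamps += [1000 + i * 1000 / 30 for i in range(30)]
    stamps.append(2000.0)
    print(summarize(per_second_fps(stamps)))
```

Counting frames per whole second gives the familiar FPS-over-time curve, while the minimum of those counts corresponds to the point where performance bottoms out. A card can trail on the average and still lead on the minimum, which is the pattern described in the results above.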